Google BigQuery GIS – Geospatial in the Cloud

Chad Jennings is the GIS lead at Google Cloud and a member of the product management team for Google’s BigQuery. His mission is to figure out how to innovate in geospatial cloud while drawing on the GIS assets that Google “proper” already has. Before he joined Google, he had done several startups. Some went fine, and some ended up as smoldering craters in the ground. The last one was his entry into the combination of what people called Big Data and geospatial analytics.

What Is BigQuery?

It’s Google Cloud’s enterprise data warehouse: an immensely scalable, fast data storage and processing product that got a geospatial facelift a couple of years ago. Users interact with BigQuery through standard SQL verbs. It feels like a relational database, except that your relations and tables can be petabytes big; to the user, it’s still just a matter of typing in SQL. It was the first cloud data warehouse to offer PostGIS-lookalike functions that can do geospatial filtering and joins.
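To make “geospatial filtering” concrete, here is a rough Python analogue of what a distance filter such as BigQuery’s ST_DWITHIN is used for: keep only the rows within a given radius of a point. It is a minimal sketch, not BigQuery’s implementation; it assumes a spherical Earth with a mean radius of about 6,371 km, uses the haversine formula, and the `lon`/`lat` row keys are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # assumed mean Earth radius (spherical model)


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in metres between two lon/lat points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def within_distance(rows, center, radius_m):
    """Keep rows whose (lon, lat) lies within radius_m of center, like a WHERE clause."""
    clon, clat = center
    return [r for r in rows if haversine_m(r["lon"], r["lat"], clon, clat) <= radius_m]
```

In BigQuery itself the same idea is a single predicate in the WHERE clause, and the engine takes care of running it across however many rows the table holds.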

Why Has It Taken So Long for GIS to Enter Data Warehousing?

From Google’s point of view, the primary workloads for a data warehouse are web logs, transactions, financial history analysis, and things of that nature.

BigQuery was invented to do web log analysis because Google, being Google, had an awful lot of it to crunch through.

That left a big gap in the product: it wasn’t serving GIS use cases at all. It’s been exciting to act as an advocate, getting engineering resources spun up and finding leads. That’s how the product has grown since 2016, including its launch in 2018. The rest is history; it has been off to the races ever since.

User Interface and Other Integrations to BigQuery GIS

All Google Cloud products have either Application Programming Interfaces (APIs) or User Interfaces (UIs). Generally speaking, the UIs are backed by APIs, and BigQuery is no different.

There’s a SQL composition window where you type in your commands or copy and paste them from examples you find on the web. Press Run Query, and the engine processes your commands and returns your results. You can then use a variety of tools to look at the results.

These include a connector for QGIS and Data Studio for business analytics (which just launched support for Google Maps base maps). Speaking of slick tools, BigQuery Geo Viz renders the results of your query directly on a map, with no transforming or transferring of data.

For more information, see the BigQuery Sandbox documentation video.

How Does Data Get into BigQuery?

There are two ways.

The first is batch loading. BigQuery’s command-line tool, bq, has a load command (bq load). Loading data is free, provided customers follow the best practices documented on the web. Check out this video on loading data into BigQuery.
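Batch loads usually start from files. As a minimal sketch, assuming a target table with a GEOGRAPHY column called `geom` (a hypothetical name), the Python below writes point records as newline-delimited JSON with each location encoded as a Well-Known Text (WKT) string. Note that WKT puts longitude before latitude, a common source of swapped coordinates.

```python
import json


def to_ndjson(records, geom_field="geom"):
    """Turn dicts with lon/lat keys into newline-delimited JSON with a WKT point column."""
    lines = []
    for rec in records:
        # Copy every attribute except the raw coordinates.
        row = {k: v for k, v in rec.items() if k not in ("lon", "lat")}
        # WKT writes longitude first, then latitude.
        row[geom_field] = f"POINT({rec['lon']} {rec['lat']})"
        lines.append(json.dumps(row))
    return "\n".join(lines) + "\n"
```

A file produced this way could then be loaded with something like `bq load --source_format=NEWLINE_DELIMITED_JSON mydataset.stores stores.json id:INTEGER,geom:GEOGRAPHY`, where the dataset, table, file, and column names are all placeholders.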

There is also a streaming API, which many GIS customers use to stream industrial IoT, telematics, and vehicle data into BigQuery. We have customers streaming up to 10 Gb per second per table. That’s a fat pipe coming into the product, for data that is typically generated in real time.
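Streaming clients typically buffer rows and send them in batches rather than making one request per row. The sketch below shows that micro-batching pattern in plain Python. The `send` callback is a hypothetical stand-in for whatever actually performs the streaming insert (for instance, a wrapper around `insert_rows_json` in the google-cloud-bigquery client), and the 500-row default is an arbitrary assumption, not a documented limit.

```python
from typing import Callable, Dict, List


class StreamBatcher:
    """Buffer telemetry rows and hand them to a sender in fixed-size batches."""

    def __init__(self, send: Callable[[List[Dict]], None], max_rows: int = 500):
        self.send = send          # hypothetical sink that performs the actual insert
        self.max_rows = max_rows  # arbitrary batch size for this sketch
        self.buffer: List[Dict] = []

    def add(self, row: Dict) -> None:
        self.buffer.append(row)
        if len(self.buffer) >= self.max_rows:
            self.flush()

    def flush(self) -> None:
        # Send whatever is buffered, then start a fresh list for the next batch.
        if self.buffer:
            self.send(self.buffer)
            self.buffer = []
```

Callers would invoke `flush()` on shutdown or on a timer, so a partially filled batch from a quiet vehicle doesn’t sit in memory indefinitely.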

If you have a bunch of geospatial files, such as shapefiles or GeoJSON, that you want to ingest, we’ve partnered with Safe Software and their powerful FME tool to let you do so. It transforms up to 500 different geospatial data formats and materializes them as BigQuery tables. The tool is available in the Google Cloud Marketplace with a generous free tier. If you’ve got 1,000 shapefiles that you need loaded into BigQuery as a table, you can easily do so.

How Big Is Big?

Google typically gives its products descriptive names, and BigQuery is, quite literally, the big query engine. It’s not particularly creative, but it’s descriptive, and it lives up to its name.

Our largest customers have in excess of 250 petabytes in storage with us, and the largest single queries routinely span several petabytes, all in as little as a couple of minutes. Using partitioning and clustering, we can get queries over petabyte-scale tables to run in just a few seconds.
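Partitioning is what lets a query skip most of a huge table. The toy Python model below assumes a table partitioned by day and shows that a filter on the partition column only has to read the partitions it can match. Clustering (ordering data within partitions) is not modeled, and the partition sizes are made up for illustration.

```python
from datetime import date, timedelta


def bytes_scanned(partitions, start, end):
    """Bytes read when a filter on the partition column restricts days to [start, end]."""
    # Partitions outside the range are pruned and never read.
    return sum(size for day, size in partitions.items() if start <= day <= end)


# Illustrative table: 365 daily partitions of 1 TB each (sizes are made up).
TB = 10**12
table = {date(2020, 1, 1) + timedelta(days=i): TB for i in range(365)}
```

With this model, a one-week filter such as `bytes_scanned(table, date(2020, 3, 1), date(2020, 3, 7))` reads 7 TB instead of the 365 TB a full scan would touch.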

Does Google Talk About Big Data?

We just call it data, because it’s all big and it’s only getting bigger. Even customers processing gigabytes or a handful of terabytes find that their data needs grow every month. We’ve given up on the term Big Data.

The scale that BigQuery unlocks for users is mind-boggling. One of our product managers was telling a group of customers that users with 250 petabytes of storage were getting the query engine to scan 5.2 petabytes in one query, the largest single query at the time.

One of the more adventurous members of the audience decided to put this to the test while the talk went on. He copied a five-petabyte table three times and appended it to itself, creating a monster 17-petabyte table. He then ran a query on it and broke the product manager’s record right there, for everyone to see.

Are Customers Running Queries Simultaneously?

Yes. BigQuery is a multi-tenant system.

Google has vast data centers with millions of computers in them. BigQuery, as a product, buys capacity from Google and reserves it. When you onboard as a customer, you get access to the capacity you’ve purchased. Your query runs on a machine; when it’s done, the machine clears its cache, and my query can then run on the same machine. The compute serving multi-tenant systems (like Gmail, Search, or YouTube) is ephemeral on these machines: Google’s job allocation servers put a job on a machine, and when it’s done, the machine becomes available to someone else, maybe even from a different part of the company.
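The ephemeral-compute idea can be sketched as a toy scheduler: borrow an idle machine, run one tenant’s job, wipe the machine’s scratch state, and return it to the pool. This is a simplified illustration under our own assumptions (the `Machine` and `Scheduler` classes are hypothetical), not how Google’s job allocation servers are actually built.

```python
from collections import deque


class Machine:
    """A worker whose cache holds scratch state only while a job is running."""

    def __init__(self, name):
        self.name = name
        self.cache = {}


class Scheduler:
    """Toy allocator: borrow an idle machine, run the job, flush it, give it back."""

    def __init__(self, machines):
        self.idle = deque(machines)

    def run(self, tenant, job):
        machine = self.idle.popleft()   # raises IndexError if no capacity is free
        try:
            machine.cache["tenant"] = tenant
            return job(machine)
        finally:
            machine.cache.clear()       # nothing from this tenant survives the job
            self.idle.append(machine)   # the machine is now free for anyone else
```

The `finally` clause is the important design choice here: the flush happens even if a job fails, so a crashed query can’t leave one tenant’s data behind for the next.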

Customers want assurance that Google excels at putting your data onto a computer, running a process, and then flushing it so that someone else can use the machine. It’s your data, and Google isn’t sharing it, even if you’re using the free tier of BigQuery. That privacy guarantee is the essential takeaway for users of a scalable, multi-tenant system.

The Simplicity of the GIS Cloud

As a user, you load your data, write your query, and press the Run Query button. Your data could be petabytes, but you don’t have to worry about scaling the resources behind it. That’s the value proposition of the BigQuery user experience.

When you talk about cloud GIS, it’s a multi-dimensional question, no pun intended. There’s tabular analytics, or vectors, as GIS professionals would call them; database people come to this conversation wanting to talk tables. So there’s tabular analytics, imagery analytics, and the data and compute assets behind them. When you start unlocking capacity for tabular analytics, you enable users to do things they simply couldn’t do before.

One of our customers uses image analytics in conjunction with tabular analytics to analyze food production worldwide. They use Google Earth Engine, where they can do image recognition or process Landsat imagery and identify, from the spectral signature in the image, what a particular parcel of land is growing.

They extract which crops are being grown, and on how many hectares, for areas as large as all of Argentina, South America, or Europe. It’s analysis on a colossal scale. Armed with a table of polygons and coordinates for the different crops, they combine it with weather data and a machine learning model in BigQuery to predict how many soybeans a country such as Ukraine will produce in 2021, based on 2020’s production environment.
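The tabular half of that workflow can be sketched in a few lines. Assuming a hypothetical table of crop parcels with areas in hectares, plus a handful of past seasons’ rainfall and yield figures, the Python below sums hectares per crop, fits an ordinary least-squares line of yield against rainfall, and multiplies the predicted yield by the planted area. Plain linear regression is a stand-in chosen for brevity; the customer’s actual machine learning model isn’t described here, and all names and inputs are illustrative.

```python
from collections import defaultdict
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def hectares_by_crop(parcels):
    """GROUP BY crop, SUM(hectares) over a list of parcel dicts."""
    totals = defaultdict(float)
    for p in parcels:
        totals[p["crop"]] += p["hectares"]
    return dict(totals)


def forecast_tonnes(parcels, crop, past_rain_mm, past_yield_t_ha, rain_forecast_mm):
    """Planted area of `crop` times the yield predicted from forecast rainfall."""
    a, b = fit_line(past_rain_mm, past_yield_t_ha)
    predicted_yield = a + b * rain_forecast_mm  # tonnes per hectare
    return hectares_by_crop(parcels).get(crop, 0.0) * predicted_yield
```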

Cloud GIS is a multi-dimensional geo-analysis capability, unfettered from traditional scale blockers, so that businesses can run global analyses where before they had to work one neighborhood at a time.

It’s a cloud that doesn’t require a huge leap for the user: Google products work together so seamlessly that people don’t even notice they’re using several of them. Cloud GIS is at an earlier stage of that journey than G Suite is, but that’s where we want to go.

A Specialized Use Case

The mission of Global Fishing Watch is to help countries identify bad behavior at sea. They look at Sentinel radar imagery inside Google Earth Engine and find the radar returns for every single ship in the ocean. If you’re a bad guy, you can’t hide your ship from satellite radar. All the good guys on the water broadcast AIS, the Automatic Identification System, through which ships report their latitude, longitude, identifier, heading, and velocity.

Global Fishing Watch runs a massive diff between the radar imagery and the AIS data to find the dark fleet: vessels that are present in the radar data but absent from the AIS data. Those ships are potentially up to no good, because they should be broadcasting, and they’re not.

Some nefarious actors have already been brought to justice this way. This analysis helped locate and identify human trafficking and piracy in Indonesia, as reported by the Associated Press.

How?

Global Fishing Watch ran the radar imagery analysis in Google Earth Engine, exported the results to BigQuery, and did the diff against the AIS data inside BigQuery. The result was a list of vessels for people to go and check on.
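At its core, that diff is an anti-join: keep the radar detections that have no AIS ping nearby in both space and time. The sketch below assumes hypothetical record layouts (latitude and longitude in degrees, time in minutes) and arbitrary 2 km / 30 minute matching thresholds. The nested loop is only for illustration; at ocean scale, the same logic would run as a join inside BigQuery.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km, assuming a spherical Earth of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(min(1.0, math.sqrt(a)))


def dark_vessels(radar, ais, max_km=2.0, max_minutes=30):
    """Radar detections with no AIS ping within max_km and max_minutes (an anti-join)."""
    unmatched = []
    for det in radar:
        matched = any(
            abs(det["t"] - ping["t"]) <= max_minutes
            and haversine_km(det["lat"], det["lon"], ping["lat"], ping["lon"]) <= max_km
            for ping in ais
        )
        if not matched:
            unmatched.append(det)  # seen by radar, silent on AIS
    return unmatched
```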

What About a GIS Use Case?

The primary use case for BigQuery GIS is filtering and doing geospatial joins on polygons and points that are stored inside it.
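A point-in-polygon join pairs each point with the polygons that contain it, which is the kind of join predicates such as BigQuery’s ST_CONTAINS or ST_INTERSECTS express. The Python below is a minimal sketch using the classic even-odd ray-casting test on planar coordinates. That is an approximation that only holds for small polygons, and it is not how BigQuery evaluates geography predicates; the record layouts are hypothetical.

```python
def point_in_polygon(x, y, ring):
    """Even-odd ray-casting test; ring is a list of (x, y) vertices."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge crosses the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def spatial_join(points, polygons):
    """Pair each point with every polygon containing it (a contains-style join)."""
    return [
        (pt["id"], poly["name"])
        for pt in points
        for poly in polygons
        if point_in_polygon(pt["lon"], pt["lat"], poly["ring"])
    ]
```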

A number of our customers use it for traffic analysis. They record position, speed, and acceleration so they can identify hazardous driving behaviors, using commercial vehicles as their fleet of sensors. Companies such as FedEx and UPS hire telematics companies to collect data on their delivery vehicles and report it back. Because there are so many delivery vehicles out there, you can infer what traffic is like globally from this sample.

Lots of heavy braking in a particular spot in adverse weather conditions? That probably means the corner of 5th and Elm is a problem, and the city planner should put a stop sign there. This is an example of a more traditional use case.
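The hard-braking example can be sketched as a threshold plus a grid count. Assuming hypothetical telematics events with latitude, longitude, and longitudinal acceleration in m/s², the Python below keeps events decelerating harder than an arbitrary -4 m/s², snaps them to grid cells 0.001 degrees on a side (roughly 100 m north to south), and reports cells with repeated events. Weather conditions are left out for brevity.

```python
import math
from collections import Counter


def braking_hotspots(events, decel_threshold=-4.0, cell_deg=0.001, min_count=3):
    """Return {grid cell: count} for cells with at least min_count hard-braking events."""
    counts = Counter()
    for e in events:
        if e["accel_mps2"] <= decel_threshold:  # hard braking only
            # 0.001 degrees of latitude is about 111 m; cells shrink east-west away from the equator.
            cell = (math.floor(e["lat"] / cell_deg), math.floor(e["lon"] / cell_deg))
            counts[cell] += 1
    return {cell: n for cell, n in counts.items() if n >= min_count}
```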

BigQuery and Astronomy?

There is a use case for that, too. It’s a cocktail party-worthy one.

The coordinates astronomers use to map the stars work the same way as the coordinates navigation engineers or geospatial analysts use to map points on Earth. Latitude and longitude are exactly analogous to declination and right ascension, respectively, which are the angular measures astronomers use to map out where the various stars are.

One of our Google solution architects mapped a whole bunch of radio astronomy data (and by a whole bunch, I mean terabytes’ worth) into BigQuery GIS. He pretended that right ascension was longitude and declination was latitude. With those variable swaps made, all of the PostGIS-style syntax verbs worked, and he was able to do clustering and map new radio phenomena. Terrestrial coordinates developed in the 1600s or 1700s can now be applied to astrophysics. It’s mind-blowing, and you can read more about it in the article “The Stars and BigQuery.”
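The variable swap itself is simple arithmetic. The sketch below converts right ascension from hours to degrees (24 hours equal 360 degrees), wraps it into the usual [-180, 180) longitude range, and passes declination through as latitude; it then measures angular separation with the haversine formula on a unit sphere. The function names are hypothetical. Because we view the sky from inside the sphere, the resulting map is a mirror image, but a reflection preserves angular distances, so clustering and distance queries are unaffected.

```python
import math


def radec_to_lonlat(ra_hours, dec_deg):
    """Map right ascension (hours) and declination (degrees) onto longitude/latitude."""
    lon = ra_hours * 15.0                  # 24 h of right ascension = 360 degrees
    lon = (lon + 180.0) % 360.0 - 180.0    # wrap into [-180, 180) as longitudes expect
    return lon, dec_deg                    # declination already spans [-90, 90]


def angular_separation_deg(lon1, lat1, lon2, lat2):
    """Great-circle separation in degrees (haversine on the unit sphere)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(a))))
```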

What’s BigQuery Not For?

It’s not for storing and processing unstructured data. That said, some people experiment with raster analysis, or render and process SAR data, inside BigQuery, but that’s not what it’s optimized for.

BigQuery’s sweet spot is points (latitudes and longitudes), line strings, and polygons; these are what our geospatial data types focus on.
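Those shapes have standard text encodings in Well-Known Text (WKT), which BigQuery can parse with ST_GEOGFROMTEXT. The Python helpers below are a minimal, hypothetical sketch for building WKT strings from (longitude, latitude) pairs; the one detail worth noticing is that a polygon ring must end on the vertex it started from.

```python
def _coords(pairs):
    # WKT writes each vertex as "lon lat", with vertices separated by commas.
    return ", ".join(f"{lon} {lat}" for lon, lat in pairs)


def point_wkt(lon, lat):
    return f"POINT({lon} {lat})"


def linestring_wkt(pairs):
    return f"LINESTRING({_coords(pairs)})"


def polygon_wkt(ring):
    """Single-ring polygon; closes the ring if the caller did not."""
    ring = list(ring)
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # rings must start and end on the same vertex
    return f"POLYGON(({_coords(ring)}))"
```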

If you’ve got imagery analysis or unstructured data, you can still take a look, but BigQuery may not be the best tool for the job. For imagery analysis in conjunction with BigQuery, you can use a companion tool such as Google Earth Engine or Descartes Labs, to name two big ones.

What’s the Impact of All This for Geospatial Cloud Analytics?

For years, geospatial analysis, and data analysis in general, has been hindered by an inability to scale. A decade ago, people were creating data lakes: they poured in data that seemed too valuable to throw away and was cheap to store, keeping it without knowing what they would do with it.

Those data lakes are still there, and nobody’s been swimming in them. Ten years of terabytes and petabytes have turned into data swamps that no one has waded through. With the new tools we have, we can start exploring this legacy data efficiently and find out whether there are gems in it or whether we can get rid of it.

In the future, with lower costs for collecting, streaming, storing, and processing data, people will be able to make applications and analyses that leverage data more efficiently, just like the example we’ve talked about for food production mapping and forecasting. It’s going to take the brakes off for a lot of businesses.

Is the Next Big Thing Moving The Code to The Data?

That’s one of the trends of our technical lifetime, yes.

The other one is moving the compute to where the data is instead of pushing the data around. If you look at any Gartner or Forrester report, you’ll see that zettabytes of data are coming your way. I barely even know what a zettabyte is. It’s almost comical until you’re suffering from the fact that your data is too big for your infrastructure.

We’ll definitely get to the point where, in the same way people use online documents and share links to a document instead of sending actual files, we will share links or access to a data set rather than putting it onto a USB stick.

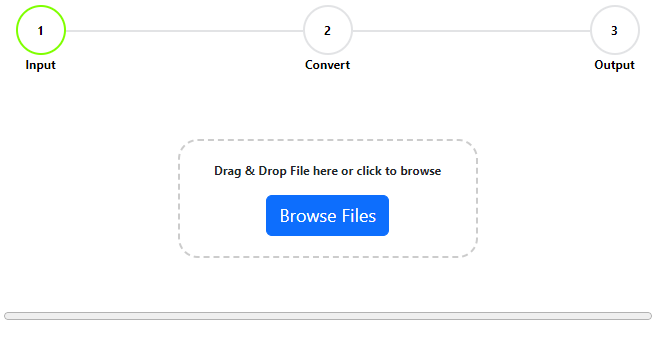

Many companies, including Google, are working hard to keep lowering the technical skill needed to interact with these tools. FME, the ingest tool we talked about earlier, is one example. It’s drag and drop: you create data pipelines by dragging boxes, clicking on parameters, and pressing go, without having to specify what the servers look like. These tools are approaching no code or low code, which means GIS practitioners can unleash their creativity and data is easier to share. Millions of people are using these tools and unlocking their creativity, and seeing what people do with the tools I make is the most fun part of my job.

GIS Utopia vs. the Competitive World

In GIS utopia, everyone has access to data, and the skills needed to use it keep getting lower. People unlock the full scope of human creative potential, and we solve all of our problems. It’s lovely there.

However, over in the competitive world, not everyone wants to share their data with everybody else. Some of it might be precious and their only competitive advantage. It needs to have security controls on it, and only the right people can access it.

As much as we love talking about utopia, real-world people need to protect their intellectual property and livelihood. A lot of that lies in the data and the results of the analysis that are also data.

Let’s aim to be hopeful, optimistic, and altruistic while protecting what we’ve developed.

Are you heading over to BigQuery GIS right now to see what it can do for you? I hope so. You can always ping Chad on Twitter and join the community there or see tutorials and lessons people are putting out for others to benefit from. Let me know if you find something interesting.

Frequently Asked Questions

Is Google a GIS?

Google itself is not a GIS, but tools such as Google Earth Engine, Google Maps, Google Earth, and of course Google BigQuery are geographic information systems.

What is BigQuery not good for?

Google’s BigQuery is not designed for processing image data or other non-tabular data. To process these kinds of data in BigQuery, you must first translate them into a table.

Is BigQuery better than SQL?

BigQuery is neither better nor worse than SQL! SQL (Structured Query Language) is a standardized programming language used to manage relational databases and perform various operations on the data in them. BigQuery uses SQL as a standardized way for users to access and manipulate the data stored in it.

What is the use of BigQuery?

For GIS work, the best use case for BigQuery is querying and manipulating massive amounts of tabular geospatial data.