Microsoft’s Planetary Computer

Our guest today is Rob Emanuele, a Geospatial Architect at Microsoft who is helping build the Planetary Computer. After earning a Mathematics degree from Rutgers, he worked his way through several computer engineering roles before spending many years at Azavea on the GeoTrellis and Raster Vision projects. That work gave him hands-on experience applying big raster data solutions to the Earth’s systems. He stays true to his open source roots by bringing the same group-effort mentality to the Planetary Computer project at Microsoft.

What is the Planetary Computer?

The Planetary Computer is a highly modular geospatial project by Microsoft that allows users to query, access, and analyze environmental data at global scales, taking advantage of cloud and blob streaming technology. In a space where many people still download data for their study area, it is exciting to see such an investment in heavy-duty GIS cloud technology. The efficiency and power of the cloud mean unprecedented processing capabilities, allowing the Planetary Computer to help answer some of the world’s biggest questions.

From the beginning, the Planetary Computer has been designed to integrate with open source elements and to be worked into existing environments, especially Azure.

This open source integration is facilitated through tools in the Planetary Computer Hub. APIs are available both through the Hub and standalone, so that the many hosted datasets can be queried in a consistent and efficient way. Combined with the analysis-ready data formats hosted by Microsoft, this puts a lot of power at the user’s disposal.

The Planetary Computer hosts data from Landsat, Sentinel, ASTER, ALOS, GOES-R, NAIP, and many more geospatial data providers. These data are organized and made interoperable via their metadata. The project uses the SpatioTemporal Asset Catalog (STAC) specification to describe these massive amounts of data with standardized metadata, which provides the information needed to integrate the datasets with each other and use them seamlessly. Microsoft, however, does its best to leave these data true to form, recognizing the scientific value and meaning imparted by the preparation done by their original sources.
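To make the role of STAC metadata more concrete, here is a minimal sketch of how a search request for a STAC API could be assembled. The field names (`collections`, `bbox`, `datetime`, `limit`) follow the STAC API item-search convention; the helper `build_stac_search`, the collection id, and the bounding box are illustrative assumptions, not values taken from the interview.

```python
import json
from datetime import date


def build_stac_search(collection, bbox, start, end, limit=10):
    """Build a STAC API item-search request body as a plain dict.

    Hypothetical helper for illustration; it only assembles the JSON
    body and does not contact any service.
    """
    if len(bbox) != 4:
        raise ValueError("bbox must be (west, south, east, north)")
    return {
        "collections": [collection],
        "bbox": list(bbox),
        # Time ranges are expressed as an RFC 3339 interval "start/end".
        "datetime": f"{start.isoformat()}T00:00:00Z/{end.isoformat()}T23:59:59Z",
        "limit": limit,
    }


body = build_stac_search(
    collection="sentinel-2-l2a",       # assumed collection id
    bbox=(-75.3, 39.8, -74.9, 40.1),   # assumed study area
    start=date(2021, 6, 1),
    end=date(2021, 6, 30),
)
print(json.dumps(body, indent=2))
```

A body like this would typically be sent as a POST request to a STAC API's `/search` endpoint, which returns the matching items together with links to their assets.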

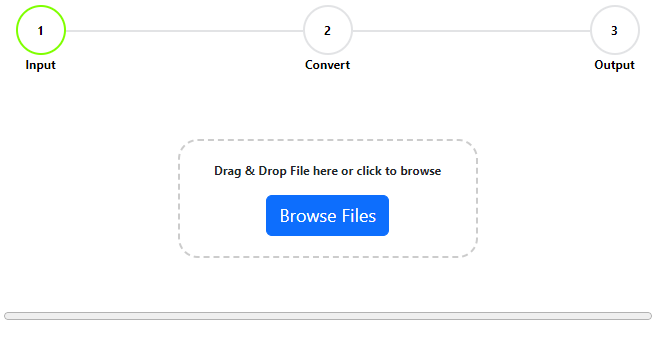

Accessing and using the tools, data, and services associated with the Planetary Computer is free. To get started, one must apply to participate in the preview, or beta program, as not every component of the project is fully publicly available yet.

Once approved, you can generate an anonymous token that provides access to the data catalog. When the token expires, you can simply generate a new one.
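The expire-and-regenerate cycle can be sketched as a small cache. This is an illustration under stated assumptions, not the Planetary Computer's own client: `TokenCache`, `sign_url`, and `fake_fetch` are hypothetical names, the one-hour lifetime is invented for the demo, and the sketch assumes the token is appended to an asset URL as a query string, as is common for shared-access-signature style tokens.

```python
from datetime import datetime, timedelta, timezone


class TokenCache:
    """Hold a short-lived token and request a new one once it expires."""

    def __init__(self, fetch_token, margin_seconds=60):
        # fetch_token is a hypothetical callable: now -> (token, expiry)
        self._fetch_token = fetch_token
        self._margin = timedelta(seconds=margin_seconds)
        self._token = None
        self._expiry = None

    def get(self, now=None):
        now = now or datetime.now(timezone.utc)
        # Refresh slightly before the real expiry to avoid mid-request failures.
        if self._token is None or now >= self._expiry - self._margin:
            self._token, self._expiry = self._fetch_token(now)
        return self._token


def sign_url(url, token):
    """Append the token to the URL as a query string (assumed scheme)."""
    separator = "&" if "?" in url else "?"
    return f"{url}{separator}{token}"


issued = []


def fake_fetch(now):
    # Stand-in for a real token request; each call "issues" a new token
    # that is valid for one hour (an assumed lifetime).
    issued.append(f"sig=demo{len(issued) + 1}")
    return issued[-1], now + timedelta(hours=1)


cache = TokenCache(fake_fetch)
t0 = datetime(2021, 1, 1, tzinfo=timezone.utc)
first = cache.get(now=t0)                          # no token yet, so fetch
again = cache.get(now=t0 + timedelta(minutes=30))  # still valid, so reuse
later = cache.get(now=t0 + timedelta(hours=2))     # expired, so fetch again
signed = sign_url("https://example.com/data/tile.tif", later)
print(first, again, later, signed)
```

Passing `now` explicitly keeps the demo deterministic; in real use the default of the current UTC time would apply.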

The Components of the Planetary Computer

The immense scale of the data behind the Planetary Computer requires an equally flexible and scalable architecture to support the massive workflows it was designed for. Naturally, Microsoft is prepared for this thanks to its extensive experience with Azure, its cloud computing, data storage, and analysis platform.

The four components which make up the Planetary Computer are the Data Catalog, the APIs, the Hub, and the Applications built on top of the platform.

The Data Catalog contains petabytes of imagery, sourced from their original providers, then hosted together on the Azure cloud. The APIs take advantage of the standardized metadata produced through STAC to facilitate querying data of interest. The Hub is an environment, deployed via JupyterHub and preloaded with popular geospatial tools, allowing scientists to get to work quickly. The associated Applications are created by third parties and built on top of Planetary Computer resources. Combined, these components can attain the trifecta of hosting, searching, and computing data.

One of the ways Microsoft has set itself up for success with such a massive project is by storing the data immediately next to where it will be processed. This is one of the many benefits of having enormous data stores and servers at your disposal, as well as the resources to integrate Kubernetes clusters. The close physical proximity means the most cumbersome part of the process is the user’s GET request.

In terms of how the data are delivered, Cloud Optimized GeoTIFFs (COGs) are the most common standard. Data, especially weather data, can also take the form of cloud-optimized NetCDF, HDF, and Zarr data. These N-dimensional array formats are easier to “chunk” into more manageable sections. The ability to break up binary large object (BLOB) data is essential for streaming it efficiently. Partitioning the data, and describing it well via its metadata, allows APIs to index, query, and stream just the bits of data most relevant to the analysis, rather than pulling everything as traditional downloads do.
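The benefit of chunking can be shown with a little arithmetic. The sketch below works out which chunks of a tiled raster overlap a requested pixel window, which is the kind of calculation a reader of a chunked format performs before fetching only the byte ranges it needs. The function name, raster size, chunk size, and window are all illustrative assumptions.

```python
import math


def chunks_for_window(window, chunk_shape):
    """Return (row, col) indices of the chunks that overlap a pixel window.

    window: (row_start, row_stop, col_start, col_stop), stops exclusive.
    chunk_shape: (rows, cols) of a single chunk.
    """
    row_start, row_stop, col_start, col_stop = window
    chunk_rows, chunk_cols = chunk_shape
    first_row, last_row = row_start // chunk_rows, (row_stop - 1) // chunk_rows
    first_col, last_col = col_start // chunk_cols, (col_stop - 1) // chunk_cols
    return [
        (r, c)
        for r in range(first_row, last_row + 1)
        for c in range(first_col, last_col + 1)
    ]


image_shape = (10980, 10980)        # assumed raster size in pixels
chunk_shape = (512, 512)            # assumed internal chunk size
window = (1000, 1600, 2000, 2300)   # assumed area of interest

needed = chunks_for_window(window, chunk_shape)
total = math.ceil(image_shape[0] / chunk_shape[0]) * math.ceil(
    image_shape[1] / chunk_shape[1]
)
print(f"{len(needed)} of {total} chunks needed")
```

With these assumed numbers only a handful of the several hundred chunks have to be transferred, which is the gap between streaming a study area and downloading a whole scene.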

The components for the Planetary Computer are available on GitHub.

The Planetary Computer’s History and Future

The Planetary Computer did not come purely from Microsoft’s goodwill. The company approached the beginnings of this project by acknowledging that, in order for Microsoft to do well, the Earth must do well.

The threats of climate change, loss of biodiversity, and many of the world’s other problems would be bad for business. In an effort to set everyone up for success, Microsoft decided to leverage its resources to create an ultimate resource for earth scientists by pulling everything they need for interpretation into one place.

An all-encompassing imagery analysis hub is not a new concept. Google Earth Engine was notably first on the scene. Esri’s imagery services and products have also been around for some time in the pay-to-play realm.

One of the biggest shifts with the Planetary Computer will be the ability to keep costs low, or even non-existent, for the full data pipeline, with conscious integration into existing systems. Although they are very much competing for the same market, Google Earth Engine and the Planetary Computer have a collaborative and friendly relationship. Ultimately, the goal is to better the planet, and no one achieves such a task alone.

In the meantime, there are still obstacles to overcome before the Planetary Computer (note, another PC by Microsoft) is fully up and running. STAC has done fantastic work in standardizing data categorization by using a common JSON format for metadata, but data fusion is still a tricky and intensive process to sort out. Sensors from different manufacturers, along with varied time intervals and resolutions, pose challenges for true interoperability of data.

In the future, once the kinks have been worked out of the larger system, there is tremendous potential for artificial intelligence applications.

We may see pre-packaged, highly specialized models that users can run on open data, then seamlessly host and display the results in the cloud. In fact, we have already seen some of this with the Microsoft Building Footprints dataset. Many industries have questions about the world, and with time we may find that the answers are no longer on the distant horizon, but rather at our fingertips.

FAQs

Is the Microsoft Planetary Computer free?

Yes! Access to the data is free. You can browse the data catalog, access the data, and use it in your own environment at no cost. Because the data is stored in cloud-native formats, you can stream it into your own environment, and Microsoft provides this service for free. However, if you are looking to process large amounts of data, streaming will quickly become a bottleneck. This is why the Planetary Computer has a “Hub”, a computing environment that is preloaded with popular geospatial tools. There is a cost involved with using the computing resources in this environment.

Microsoft Earth

Microsoft does not make a version of Google Earth. If you are searching for this term, you might actually be looking for the Microsoft Planetary Computer or Microsoft’s AI for Earth programme, which has been discontinued.