Our guest today is Matt Pietryszyn, the CEO and co-founder of Qwhery, a company looking to use smart devices to streamline interactions between citizens and their municipalities. After years in the public GIS sector, Pietryszyn recognized a strain on 311 resources and wanted to help. A tinkerer with a skillset in developing open data solutions, he responded with Q11, a smart speaker application that aims to make location information easier to access for everyday questions.

Voice Assistants and Spatial Applications

In the modern world, voice technology is becoming more and more commonplace. In 2019, 20% of Google searches were voice queries, and in general, a hefty share of searched questions come from users looking for information on things in their local area. To complement this, over one-third of Americans now own a smart speaker. Given the current landscape, it is unsurprising that some innovators are looking to give the people what they want: a smart-speaker-compatible application that will answer their spatial questions.

Smart speakers, such as Amazon’s Alexa and Google Home, are interactive speakers that allow users to control their smart homes. This largely involves tasks such as turning lights on or off, playing music, or telling a silly joke on demand.

These platforms also offer app stores with custom libraries of functions for users to take advantage of. Amazon’s applications are called skills, while Google’s are called actions. Independent companies and developers can contribute to these app stores to add even more targeted functionality.

Although there is already a plethora of skills and actions on the market, smart voice assistance is still a very underexplored space. Spatial queries in particular leave a lot to be desired, but hold a great amount of potential.

Because smart devices store a user profile containing a home address, phone number, email, and a number of other preferences, the figurative distance to be traveled to start answering people’s spatial questions is shorter than one might think.

The trick lies in translating how humans ask questions into how computers understand questions, then encouraging humans to continue to take advantage of this emerging technology as new capabilities are released. A significant part of this is porting web-page-based applications to voice-based interactions.

A web map may be the driving force behind answering a user’s question on a web page, but a map simply cannot be communicated in the same way strictly through words. This requires rethinking how people interact with spatial information at its core.

How Do Voice Skills and Actions Work?

As everyone here may know, humans and computers speak different languages. Computer scientists have spent a long time working out how to better translate between the two, and now we benefit from an extensive catalog of options. The people over at Qwhery in particular are focused on adding geographical questions to this growing dictionary to allow you to ask “Alexa, when will my garbage be picked up?” or “Hey Google, what is going on downtown tonight?” and get back a quick and useful result.

Accomplishing this task is simple in theory, but takes time to implement correctly. The general workflow involves first converting the user’s question to a query, pushing it to the appropriate municipality’s API and backend, then returning an understandable response to the user.

Converting user questions into actionable queries has been the mission of smart speakers from the beginning. The process, called natural language processing (NLP), involves converting the spoken word into text, then extracting information from that text to build a query or command. The phrases people use for requests are called ‘utterances’.

Lists of synonyms are created for the words used in utterances. These lists are expanded in order to help train the program for the many language variations that the application will likely encounter from the public.
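To make the role of synonym lists concrete, here is a minimal sketch of matching an utterance to an intent by keyword. It is only an illustration: the intent names and synonym lists are hypothetical, and real voice platforms rely on trained language models rather than simple phrase matching.

```python
import re

# Hypothetical intents and synonym lists; a real skill would define far more.
INTENT_SYNONYMS = {
    "waste_pickup": ["garbage", "trash", "rubbish", "recycling"],
    "construction": ["construction", "road work", "roadwork"],
    "events": ["events", "going on", "things to do"],
}


def normalize(utterance: str) -> str:
    """Lowercase the text, replace punctuation with spaces, collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]+", " ", utterance.lower())
    return " ".join(cleaned.split())


def match_intent(utterance: str):
    """Return the first intent with a synonym found in the utterance, else None."""
    text = normalize(utterance)
    for intent, synonyms in INTENT_SYNONYMS.items():
        if any(re.search(rf"\b{re.escape(s)}\b", text) for s in synonyms):
            return intent
    return None
```

With these lists, "when will my trash be picked up?" and "when will my garbage be picked up?" both resolve to the same `waste_pickup` intent, which is the point of expanding the synonyms over time.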

While an application like Q11 will respond to specific requests, for example “Alexa, what construction projects are there within 2 miles of me?”, it is also engineered to create queries from more general questions like “Hey Google, what construction projects are there near my house?”.
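The difference between the two kinds of request can be sketched as follows: use the distance when the speaker states one, and fall back to a default when they say something vague. The pattern, function name, and one-mile default are all assumptions made for illustration, not a description of how Q11 handles this.

```python
import re

# Assumed fallback radius for vague phrases such as "near my house".
DEFAULT_RADIUS_MILES = 1.0

# Matches phrases like "within 2 miles" or "within 0.5 mile".
DISTANCE_PATTERN = re.compile(r"within\s+(\d+(?:\.\d+)?)\s+miles?", re.IGNORECASE)


def search_radius_miles(utterance: str, default: float = DEFAULT_RADIUS_MILES) -> float:
    """Use the distance the speaker stated, or the default when none is given."""
    match = DISTANCE_PATTERN.search(utterance)
    return float(match.group(1)) if match else default
```

A sketch like this only handles miles; a production skill would also need to cover other units and phrasings.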

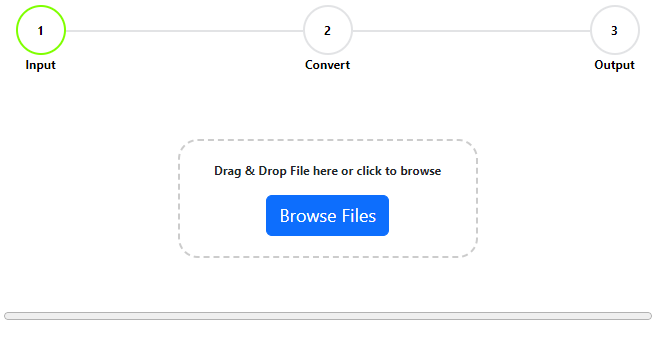

Once the utterance has become a query, it gets fed into the relevant locality’s API. As there is no set standard for how GIS services are administered, stored, and accessed, this API setup has to be done manually, using Qwhery.cloud, by the municipality’s GIS or IT department. This allows them to take advantage of the potential features of the Q11 application, as long as they have a feature service available that matches the user’s needs.

Given the inconsistency among various governments’ backend GIS systems, it is unlikely that we will see Amazon or Google themselves swoop in with their own custom spatial skills and actions.

It is simply more efficient for each organization to integrate its own APIs, REST endpoints, and services. When the localities themselves are involved in this step, it also allows them to better regionally adapt their queries and outputs. Where “nearby” may mean 10 minutes in a small town, it may mean an hour in a larger city.
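As a rough illustration of what such a query can look like, the sketch below builds a request URL for a "features near this point" search. It assumes the municipality publishes an ArcGIS-style REST feature service, which the discussion does not confirm, and the service URL in the usage note is a placeholder. The function only builds the URL and sends nothing over the network.

```python
from urllib.parse import urlencode


def build_nearby_query(service_url: str, lon: float, lat: float, radius_miles: float) -> str:
    """Build a query URL for features within radius_miles of a point.

    Assumes an ArcGIS-style feature layer endpoint; other backends
    would need different parameters.
    """
    params = {
        "where": "1=1",                        # no attribute filter
        "geometry": f"{lon},{lat}",            # point as "x,y"
        "geometryType": "esriGeometryPoint",
        "inSR": 4326,                          # WGS84 longitude/latitude
        "spatialRel": "esriSpatialRelIntersects",
        "distance": radius_miles,              # buffer around the point
        "units": "esriSRUnit_StatuteMile",
        "outFields": "*",
        "returnGeometry": "false",
        "f": "json",
    }
    return f"{service_url.rstrip('/')}/query?{urlencode(params)}"
```

For example, `build_nearby_query("https://gis.example.gov/arcgis/rest/services/Construction/FeatureServer/0", -79.38, 43.65, 2)` produces a URL that could be fetched and summarized into a spoken response. Because every municipality names its layers and fields differently, this is the part that has to be configured per locality.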
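One way to picture this regional adaptation is a small per-locality settings table that defines what “nearby” means in travel time. The locality names, values, and fallback below are hypothetical and simply echo the small-town versus large-city contrast above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocalitySettings:
    name: str
    nearby_minutes: int  # travel time that counts as "nearby" in this locality


# Hypothetical localities and values, for illustration only.
SETTINGS = {
    "smallville": LocalitySettings("Smallville", nearby_minutes=10),
    "metropolis": LocalitySettings("Metropolis", nearby_minutes=60),
}


def nearby_minutes(locality_id: str, fallback: int = 15) -> int:
    """Return the locality's own definition of "nearby", or a fallback."""
    settings = SETTINGS.get(locality_id)
    return settings.nearby_minutes if settings else fallback
```

Keeping this value in the locality's own configuration means the same question can yield sensibly different results in different places without any change to the voice app itself.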

The Future of Spatial Voice Querying

Voice assistants and artificial intelligence in general have come a long way very quickly, but of course, there is still ground to cover. We have already seen next-generation features incorporated, like Q11 notifying users when the garbage truck should be expected, but both technical and social challenges stand in the way of future uses of geospatial voice apps.

A broad goal that GIS departments have in implementing tools like voice and web applications is to reduce the number of calls to 311 centers, and to generally produce better-informed citizens. This mission began with geospatial web apps, and the first issue was promoting awareness of these resources among the target audience.

Geospatial voice skills and actions face the same obscurity problem. If people are not aware they can ask Alexa what the zoning limitations of their property are, they probably will not think to. By the time they have researched the answer to their question, they may not have the need to ask it again in the future. This conundrum is why developers want to facilitate reusable content: questions that need to be asked over and over again. This produces returning customers who may be more willing to invest the time to explore additional capabilities.

A huge market for potential use of spatially-aware voice apps is commercial applications. A user might be able to ask “Alexa, what is there to do downtown tonight?” and get a number of events to attend. These can be sourced from the municipality’s Economic Development office, or even go down the path of sponsored messages.

Further opportunities in the private sector lie in integrations with things like the Yelp API, where a user could ask for recommendations of where to find the best pizza in their city or neighborhood.

Once the word gets out on the possibilities behind spatial voice queries, there will be no looking back.