Spatial SQL – GIS without the GIS

Paul is a returning guest to the show. Last time, in one of our most downloaded episodes, he talked about vector tiles and how to generate them directly from a Postgres database. Today he’s talking about SQL, the Standard Querying Language, and why geospatial users should know it and use it.

Paul Ramsay is the co-founder of PostGIS —an extension to the Postgres database delivering additional spatial functionality to it. He’s also an executive geospatial engineer at Crunchy Data —an open source support company around the Postgres ecosystem.

WHAT IS SQL?

It’s a structured query language. Putting it into terms of the history of languages, it’s a language to query language. It’s what computer scientists call a fourth-generation language.

First-generation language was writing the ones in zeros, as we can see on pictures from the ’40s with plugboards and connecting wires between ports in giant computer rooms.

Second-generation languages distracted that so you could write in simple mnemonics. Assembly languages are second generation.

Third-generation languages are the ones we work in today. They’ve been distracted away from the machine and have a common idea of variables and loops in branching structures.

Fourth-generation languages take away the idea that you have to reason about how the computing is done and focus more on specifying what you want out of a given computation. They let the computer figure out the problem of how to generate the result based on the input.

SQL is often more challenging for people to get into who come from third-generation languages. They are used to thinking in terms of iterators and branching structures. In SQL, you step back and let the computer do the work. You define what you want in terms of columns and conditions, or matching columns to another set under certain conditions. The computer figures out the right order of iterating on different rows and columns and what indexes to use to join things up.

Indexes are delegated away from the query writer and handed to the computer to figure out how to do them most effectively.

SQL is a standardized version of a query language. Before it, every relational database had its own query language. Oracle was the first big proponent of SQL as a standard. Other database companies came along in the mid-80s. They labeled it as the language to use for doing queries against relational databases.

It’s been with us ever since.

It’s older than the languages people use today, which is interesting because it’s as relevant today as when it was invented, unlike COBOL or Fortran. Those were at their prime when SQL was embedded.

HOW HAS SQL STOOD THE TEST OF TIME SO SUCCESSFULLY?

In any real system, it all comes down to data, the underlying flexibility and long-term utility of the relational model.

The concept of the relational model was invented in the mid-60s. Still, it didn’t become effective until computing caught up with it in the late 70s. The model of relations of rows and columns that you can reason with logically turned out to be robust and well suited to the problems we saw with computers. It later transpired to be useful for business and data problems, too.

Then it took a while again before computing caught up with more complicated problems, like neural networks and graphs that don’t map to the relational model. The number of domains you can map into a relational model cleanly and effectively is astounding. The model was so flexible that once the language on top of it was standardized, it just went along for the ride. If you have a subset and relationship between data sets and you want a result, that’s been bolted on top of things which aren’t relational databases, it remains a useful way of expressing it.

If you’re in Big Data with Hadoop and you’re writing your queries in Hive. It merely converts the SQL into Hadoop processing commands. Much the same way the under the cover SQL engine in Postgres produces an execution tree.

SHOULD WE BOTHER LEARNING SQL?

If you want your career to progress beyond abstractions provided to you by some other programmer, you will want to learn SQL.

It’s one of the core pieces of IT knowledge that’s hard to avoid. Precisely because in any enterprise of larger, the important data will be stored in a relational database. And while there are interfaces that allow you to browse that data, you’re not going to be able to query it effectively or efficiently, unless you can send those queries to the database to execute locally against the data.

CAN WE REPLACE SQL WITH OTHER LANGUAGES?

We can, but only insofar as the data migrates away from the database to some other store, which is implemented in that language.

When you’re using R or Python and pulling data out of a database, they are running SQL behind the covers, executing that SQL in a database, and pulling the result back into the R or Python execution environment. Then you do your extra work there.

The reason there’s efficiency in pushing things back to SQL is that you can cut down on the amount of data that is being pulled out of the database. The reductio ad absurdum is when you’ve got Python and you load table. It slurps the entire table into Python. And then you do a bunch of things with that big in-memory table. And then when you’re done, you save table. It stuffs all the results back into the database.

If the work you’re doing in Python involves nothing more sophisticated than the things you can do in SQL, then you have a less efficient implementation by doing it in “Python.” Your using the database is nothing more than a file system layer. The same is true of R and any language attaching to the database.

Database has enormous advantages in the execution of having its execution layer sitting right next to the storage layer. You can write native Python and R tools which do that. But you have to remember what you’re doing is, as you make your query environment in R or Python more and more sophisticated, you are slowly writing a relational database in R or Python.

WHAT CAN’T I DO WITH SPATIAL SQL IN SOMETHING LIKE POSTGIS?

The key thing missing for a geospatial practitioner from spatial SQL is the ability to see the answers.

Visualization is a huge part of exploratory data analysis. Exploratory analysis is a big part of what geospatial professionals do. Particularly in the early stages of a project, we poke around the data a lot.

You can’t do that in spatial SQL. Not being able to see things is a considerable drawback.

When I’m doing exploratory work, I always end up in QGIS looking at different chloroplast maps, running analyses and SQL, and pulling those back into the visualization environment. You see the same thing with R. It would be a terrible language for doing statistical analysis if you can’t plot the results. You could run regressions, but if you can’t plot the residual plot afterward, it’s hard to figure out what’s going on.

The same thing is true in the geospatial world. If you can’t plot a map, it can be hard to reason what’s going on and to take the next steps. That’s the main missing piece. It’s just the fact that databases always form one component of a whole solution. Rarely are they the whole solution themselves.

CAN WE DO MOST SPATIAL THINGS IN SQL AND IN A RELATIONAL DATABASE?

Yes.

I like to talk about PostGIS as GIS without the GIS. The questions you ask analytically of a GIS are usually something like:

Given this layer, summarize values from this other layer with respect to the geometries in the primary layer.

Every GIS company has a term of art, like spatial join in ArcView, for putting together two layers based on a spatial relationship.

That’s the core idea of a database. It’s a join, and when you have spatial functions, you can do that join based on spatial conditions. It becomes a spatial join. Once you get that join, you can do other things. Chop up the results based on intersections or overlaps or take objects similar in one attribute and melt them together using a union. Find the nearest x to y for every y. These are all GIS questions.

The final step might be to put all this on the map. The best spatial database with the most functions for doing spatial analyses, and I say with a certain lack of humility, is the PostGIS engine.

HOW MANY SPATIAL FUNCTIONALITIES ARE THERE?

There are 700 functions defined in PostGIS, but many of those are duplicates of similar functionality. Some of them are backing index methods, so they do not interest the end-user. At this point, around a couple of hundred analytical functions.

CAN I EXTEND THOSE FUNCTIONS? AND WRITE MY OWN INTO THE DATABASE?

You can, and you can choose your language. Postgres supports Python, TCL, R, and almost every “scripting language.”

The most effective and inbuilt language is called the PL/pgSQL, analogous to Oracle’s PL/SQL. Users contributed many incredibly complex pieces of functionality in PL/SQL. The concave hull implementation in PostGIS was contributed by a power user and written entirely using PostGIS core functions and PL/pgSQL.

CAN I SCHEDULE TASKS IN THE DATABASE?

Yes, there is an extension to Postgres called pg_cron that lets you do inside-the-database scheduling.

IS IT POSSIBLE TO EXECUTE FUNCTIONS OUTSIDE OF THE DATABASE?

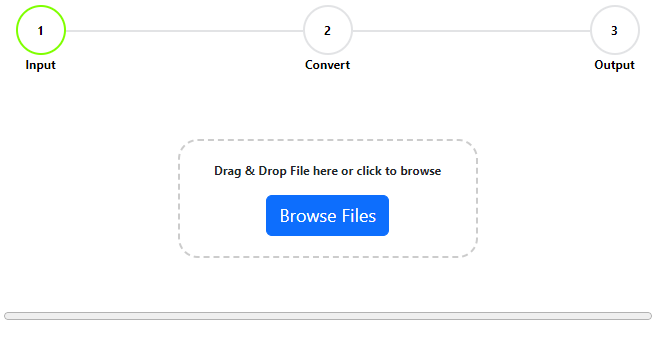

You can have a SQL script that you run using an external scheduler. The most common one is to write your logic and SQL outside of the database, knowing that all the processing is going to happen inside the database, and turn it over with some external scripting language. You end up with a five-line Python script:

Step 1: connect the database

Step 2: run this query

Step 3: take the results, save them somewhere else, and run that at some regular interval.

That’s the way you end up doing running functions outside the database.

WHY DO PYTHON AND R OVERSHADOW SQL THEN?

It’s because of the third versus fourth-generation language thing.

People get used to procedural languages and their own ways of solving problems. They take result sets, sets of rows, and then iterate on them and figure out the answer in their language, which they understand.

You don’t need to know a lot of SQL to get access to the data in a database. You just need to know SELECT * from table at the lowest common level. You can build everything you want in your external language of choice and still use the corporate database. You feel you’re using the corporate source of truth. But you’re doing the work inefficiently for execution and calculation. Either way, you’ll get your answers.

The bar of entry into SQL is extremely low. People will often step over that bar and then think they’re done, and they know enough SQL to finish the job. That’s one reason you see the primacy of execution environments like R, Python, Java, JavaScript, or whatever.

It takes a while for folks to learn SQL. If they’re not given training or have someone in their organization who can tell them how to do things in SQL 10 times faster, they won’t know. It’s also hard because some of the core, most powerful aspects of SQL are the hardest to understand if you’re used to thinking in an iterative third-generation way.

Like table joins.

They’re expressed in two lines, but they encompass in the execution engine a great deal of complexity. The reasoning around that can be challenging for people to get to initially. But once they do, they never go back.

DO YOU LEARN SQL OR SPATIAL FIRST?

To get the full power of it, learn SQL first and move on to spatial once you’ve got the core concepts of SQL. You don’t need the extra complication.

When you’re learning something abstract like joins, you just need to understand what a join is, what the different types of joins are, and how problems, which you might ordinarily cast as iterations, can be cast as set operations and joins.

It’s 2-4 days out of your life to work your way through an introductory SQL course that will cover those things. Start with the basic core part because that’s the harder part to get over. Then add in the spatial side.

When you come from a geospatial background, you already have the base knowledge of what layers are, how features work, and Tobler’s law of near things being related to each other. Even the concept of joining two layers in the spatial condition is something you get already.

DBAs start in a completely different place. They need to be explained that this is GIS, this is a layer. They already understand the logic in SQL.

WHAT MISTAKES ARE PEOPLE MAKING WHEN EXECUTING OR LEARNING SQL?

Classic mistake no 1 is reaching for the buffer function when they want to reach for a distance radius.

(Ironically, the other thing it answers is if you cast a solution in terms of make a buffer, then find the things that touch it, or you do find me the things that are within a distance.)

You get those answers at a huge performance penalty.

It’s a common mistake because geospatial people have been trained to verbalize the question of find me all the stuff near this other thing by using the word buffer. They naturally reach for it as a SQL function, but it’s an expensive SQL function.

Another common mistake is doing unconstrained joins and then filtering them poorly, just because folks haven’t figured out joins yet. That goes away with a bit of experience.

You just need to have a deep understanding of what’s happening when you push the button.

There’s no easy way to get around it. You get the right answer both ways. One of them is fast, and one isn’t. Documentation, training, or going to PostGIS workshops can be useful. There, we explain where the problem is and how you should solve it most efficiently.

Then, there are developer habits that are hard to break. Thinking in terms of iterators, building SQL thinking in terms of not set composition and filtering, but in lists of IDs between different execution frames. Thinking joins can be hard.

ISN’T THIS COMPLICATED WHEN MOVING ACROSS ENVIRONMENTS?

No. The world of computational geometry is mathematical, and it’s shared amongst the environments. Things like the buffer solution are bad ideas in almost every environment. Better things, like learning how to do set reasoning and SQL reasoning, transfer cleanly between environments.

If you get good at SQL practicing on PostGIS and Postgres, the goodness will transfer cleanly to SQL Server in Oracle. SQL is underappreciated. It’s a portable skill across different enterprises and organizations because regardless of what they’ve standardized on, it’s almost certainly going to speak SQL.

Unless you’re unlucky, and they’re standardized on something like MongoDB. In which case, you should probably quit or not take the job.

ARE POSTGRES AND POSTGIS THREATENED BY THE RISE OF NOSQL DATABASES?

Curiously, even less so than databases in general. NoSQL databases are weaker on the analytical side.

That was the case with early MongoDB. To some extent, the current MongoDB is still focused on document transactions, and pushing against the online transactional processing spine of the relational database space, and not against the analytical processing side at all. Analytics are bolted on as an afterthought.

People who use PostGIS as an analytical engine within a transactional context are not worried about NoSQL.

The reality of the NoSQL movement is that whatever perceived advantages those environments had, they’ve largely filled up the niches they could fill. There’s a continuum of utility between tools. The NoSQL databases fit at the far end of that continuum when you have specific use cases that require these distinctive features that only NoSQL databases have. Like being able to handle unconstrained write load, or easy sharding and clustering.

When you have these problems at scale, you have no choice but to use a NoSQL database. And the reason why no choice matters is that what you’re giving up when you do that is a lot of the ad hoc analytical capabilities that the relational model gets for free.

There’s this Goldilocks chain of goodness for data management. Let me explain.

At the low end, the baby bear, you’ve got desktop environments like Tableau, ArcGIS, or R. They’re incredibly flexible; you can do all kinds of cool analyses with them. But when it is time to put those analyses into production, stacking them up on the servers is a brittle solution and doesn’t work well.

In the middle, the medium bear happy times, is the SQL environment with relational tables. It can scale from things that run on your laptop to things that run in massive data centers, from single nodes to multi nodes. It still gives you the query flexibility you lack when you move to a NoSQL environment where you have this data store that can handle large volumes of throughput or transaction but requires you to write query logic efficiently yourself.

That’s not good for when you’re exploring the situation. It could only work when you have the data flow nailed down precisely.

Do you want to give up the flexibility to handle the scale?

There are places for NoSQL databases, but probably 90% of the people don’t live in those places.

WHAT IS YOUR ALL-TIME FAVORITE POSTGRES OR POSTGIS FUNCTION?

It’s ST_Intersects because it is at the core of almost every spatial join.

The amount of GIS we can do just with ST_Intersects is insane. I also love it because, at the implementation level, it has layers and layers. Because it gets used so much, we had this impulse to make it more efficient. Each step of making it more efficient has involved, for my purposes, learning more about the core execution engine in Postgres. Doing cool tricks with our internal handling of the objects so you can quickly carry out the intersects calculation, particularly in the context of joins.

It’s been the gift that keeps on giving because it’s useful. It’s been a wonderfully intellectual journey to learn how we can make it faster and more efficient, and how it works in the Postgres environment.

With the next release of PostGIS 3.1 this fall, for certain everyday use cases, like where you’re joining a table of fairly large objects against another table, we’ve managed to sneak in yet another performance optimization. It will make things faster by 20 to 30 times. It’s going to be radical.

WHAT WOULD YOU LIKE TO SEE ADDED?

There’s an entire list of analytical stuff I have on my wish list. I keep pushing GIS with other GIS. That means I’ve got to fill in the functional pieces that GIS systems offer that we don’t have yet.

The one that I missed—because I keep running into exciting and attractive analytical cases that require it—are surface interpolation. Given a set of points that have elevations or have measurements on them, either compute a best fit surface for them or do creaking so you can have a statistically valid surface for them. Once you have that surface, you can drape things on it or generate isolines.

Surface interpolation opens up this panorama of other possible analytical things that people can do. A core piece, a lot like intersects, opens up all sorts of analytical possibilities.

WHEN CAN WE SEE THIS LIVE?

It will probably be a 3.2 thing in about 12 months.

It was meant to be for 3.1, but we had to rewrite the overlay calculations in the underlying computational geometry engine for PostGIS. With that needing to land in the fall, at the same time as 3.1 comes out, there was no time to come up with other cool stuff.

WHAT’S YOUR ONE MESSAGE TO THE GIS COMMUNITY?

The geospatial community runs five to ten years slower than the broader technology community. We can learn from what they’re going through.

I see, in both small and large companies, a significant focus on the coolest, groundbreaking technology around turning imagery collection from a “we’re collecting pixels” problem to a “we’re extracting features and real-time knowledge of the world” problem.

That stokes me up no end.

It’s technologically super exciting. Yet, it’s another brick in a technological wall that we see being built by our counterparts in consumer technology. Open your phone and look at your Google Maps and realize that Google knows so much about you. That’s scary.

Things are happening in real and scary ways in other jurisdictions right now and—who knows to what extent—in the jurisdiction we live in. Take the Uyghurs in northwestern China. This is a population of 11 million people. A minority of China, but a majority in the region, living in an open-air prison mediated by technology we’re 100% familiar with because we live it every day in our consumer technology.

It’s migrated over and is used in ways which the people who invented it and promulgated it, back here in the West, would rightly consider terrifying. We’re doing really cool stuff, and there is no doubt that the same stuff will be used in alarming ways.

We have to think about how not just to do the cool stuff, but also how to use the cool knowledge we have to strengthen the civic society, and institutions we have in our area so it isn’t used in terrifying ways.

Hopefully, we can dissuade people in other territories from using our work in this way. That means taking our heads out of the keyboards and the technology and thinking about the political ramifications of what we’re doing, and how we can mitigate it.

I recognize that there are no simple solutions to how to do this. But if we don’t think about it at all, it’s guaranteed we’ll do nothing.

You can find out what Paul is up to on Twitter. I hope he’s sparked enough interest in some of you to explore SQL and to learn a skill that’s transferable across databases and apply it to your geo problems.