Julien Rebetez is a software engineer and machine learning expert at Picterra. He’s developing a build-your-own object detection AI that works on aerial and satellite imagery across various data types.

Julien started in geospatial a decade ago, when he was doing his thesis on deforestation detection from low-resolution satellite imagery. He explored machine learning because he was classifying deforestation in images. Later, he worked with deep learning, which he applied to classifying crop types in agricultural drone imagery.

At Picterra, he’s been leading the machine learning and software engineering efforts. They make machine learning work on a vast array of data: GeoTIFF, Web Map Services, RGB, infrared, and digital surface models. The platform is low-code/no-code, so people can carry out object detection without needing to know how machine learning works.

WHAT KIND OF SPATIAL DATA CAN I USE TO CREATE MY OBJECT DETECTORS?

You can upload image files from your hard drive, usually GeoTIFFs from a satellite image provider.

You can also use streaming data by connecting a server, such as a Web Map Service (WMS), to the Picterra platform and building your own machine learning detector on it.
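Behind the scenes, reading from a WMS comes down to standard OGC `GetMap` requests, each returning one image for one bounding box. As a minimal sketch, assuming a WMS 1.3.0 server, the Python below builds such a request URL with the standard library. The server address and the `orthophoto` layer name are placeholders, and nothing here reflects how Picterra issues its own requests.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layer, bbox, width, height,
                   crs="EPSG:3857", fmt="image/png"):
    """Build a WMS 1.3.0 GetMap URL for one image chip.

    bbox is (min_x, min_y, max_x, max_y) in the units of `crs`.
    Note: for EPSG:4326, WMS 1.3.0 expects latitude/longitude axis order.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",          # empty = the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    return f"{base_url}?{urlencode(params)}"


# Hypothetical server and layer name, for illustration only.
url = wms_getmap_url("https://example.com/wms", "orthophoto",
                     (260000, 6250000, 260512, 6250512), 512, 512)
print(url)
```

A detection run over a large area would issue many such requests, one per chip, tiling the area of interest.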

XYZ tile services are another possibility; XYZ is a popular tile format for web maps.
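XYZ services split imagery into a pyramid of fixed-size tiles (usually 256 pixels), where `z` is the zoom level and `x` and `y` locate a tile in a 2^z by 2^z grid. The sketch below uses the standard Web Mercator ("slippy map") formula to find which tile covers a given longitude and latitude; the tile URL template is hypothetical.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) index of the XYZ tile containing a WGS84 point.

    Standard Web Mercator tiling: 2**zoom tiles per axis, x grows eastward
    from 180°W and y grows southward from about 85.05°N.
    """
    n = 2 ** zoom
    lat_rad = math.radians(lat)
    x = math.floor((lon + 180.0) / 360.0 * n)
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so points on the east/south edges (or beyond ±85.05°) stay in range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(template, x, y, zoom):
    """Fill an XYZ URL template such as '.../{z}/{x}/{y}.png'."""
    return template.format(z=zoom, x=x, y=y)


x, y = lonlat_to_tile(2.3522, 48.8566, 10)  # central Paris
print(x, y, tile_url("https://tiles.example.com/{z}/{x}/{y}.png", x, y, 10))
```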

Picterra supports a wide range of sensor types, so the data can come from drones, aircraft, or satellites.

IS THIS JUST FOR RGB BANDS?

The platform is built around visualizing and processing three bands, as in RGB, because that’s what we’re used to looking at. But false-color images are possible too.

Instead of RGB, you can have red, green, and a near-infrared band in place of blue. You can upload this false-color image to the platform and train the detector on it, so it’s not limited to visual data.

You can also make a composite image from some visible bands, where the last band is a digital surface model (DSM), for example when processing elevation data.
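Building such composites is mostly a matter of stacking co-registered bands. The sketch below assumes the bands are already loaded as same-sized NumPy arrays (in practice, from a GeoTIFF reader) and rescales each one to 8 bits with a simple min-max stretch, so that NIR values and elevation in metres fit into the same three-channel image. The stretch is an illustrative choice; how Picterra normalizes bands is not known here.

```python
import numpy as np


def to_byte(band):
    """Linearly rescale one band to 0-255 (uint8) using its own min and max."""
    band = band.astype(np.float64)
    lo, hi = band.min(), band.max()
    if hi == lo:
        return np.zeros(band.shape, dtype=np.uint8)
    return np.round((band - lo) / (hi - lo) * 255).astype(np.uint8)


def composite(*bands):
    """Stack same-sized 2-D bands into an (H, W, C) 8-bit image."""
    shapes = {b.shape for b in bands}
    if len(shapes) != 1:
        raise ValueError(f"bands must share one shape, got {shapes}")
    return np.dstack([to_byte(b) for b in bands])


# Toy 2x2 rasters standing in for real imagery.
red = np.array([[10, 20], [30, 40]])
green = np.array([[5, 5], [15, 25]])
nir = np.array([[1000, 3000], [2000, 4000]])       # replaces blue: false color
dsm = np.array([[412.0, 415.5], [420.0, 431.0]])   # elevation in metres

false_color = composite(red, green, nir)
rg_dsm = composite(red, green, dsm)
print(false_color.shape, false_color[..., 2].tolist())
```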

WHAT IS DETECTION?

Detection means identifying and locating objects in an image, or, more broadly, automatically extracting geospatial information from an image.

You train a detector by giving the machine learning model examples of what you’re looking for. You give it examples and see how it does. If it doesn’t do well, you give it more examples. You iterate until the results are good enough.

ARE YOU DRAWING BOUNDING BOXES OR DETAILED POLYGONS AROUND THE OBJECTS?

You don’t need to go pixel-perfect, but you need detailed polygons for the model to output polygons.

GIS software typically uses segmentation and per-pixel classification. Picterra uses deep learning under the hood, so the model looks not only at each pixel but also at its context, the signatures of adjacent pixels. You’ll get much higher accuracy than with the per-pixel approach, whose output often contains a lot of noise.
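To make the per-pixel versus context contrast concrete, the sketch below classifies a toy band pixel by pixel, then applies a 3x3 majority filter that lets each pixel's neighbours vote. This is a simple hand-crafted stand-in for using context, not deep learning and not Picterra's method; a trained network learns which context matters instead of using a fixed window.

```python
import numpy as np


def per_pixel_classify(band, threshold):
    """Per-pixel rule: each pixel is labelled from its own value only."""
    return (band > threshold).astype(np.uint8)


def majority_filter(mask):
    """Relabel each pixel by majority vote over its 3x3 neighbourhood.

    Borders are handled by repeating the edge pixels (np.pad mode="edge").
    """
    padded = np.pad(mask, 1, mode="edge")
    h, w = mask.shape
    # Summing the nine shifted views counts the 1s in every 3x3 window.
    votes = sum(padded[dy:dy + h, dx:dx + w].astype(int)
                for dy in range(3) for dx in range(3))
    return (votes >= 5).astype(np.uint8)


# Toy band: a bright 3x3 roof in the corner plus one isolated bright pixel (noise).
band = np.zeros((6, 6))
band[0:3, 0:3] = 10.0
band[4, 4] = 10.0

raw = per_pixel_classify(band, threshold=5.0)
smoothed = majority_filter(raw)
print(raw.sum(), smoothed.sum())
```

The isolated noise pixel disappears, but the filter also trims the roof's inner corner, a reminder that fixed smoothing has trade-offs.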

In deep learning, you give the model freedom to pick whichever features make the most sense for the object you’re trying to detect. Understanding exactly what it picks is still an academic research topic, and we don’t have all the answers.

What is the model learning and looking at first?

It’s probably looking in stages, and the first stage is edges or sharp differences in contrast or region. Perhaps a building has a roof with a gap between the roof and the ground, surrounded by grass.

The model takes these low-level features and combines them into shapes. It’s going to look for things that are square, because buildings usually are, and of a particular size. The deeper you go into the model, the better its understanding of the object you want to detect.
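The idea that the first stage responds to edges can be illustrated with a classic hand-written edge filter. A convolutional network's first layers often learn filters that behave much like the Sobel kernels below; here the kernel is fixed rather than learned, and the scene is a made-up toy with dark ground on the left and a bright roof on the right.

```python
import numpy as np

# Horizontal-gradient Sobel kernel; its transpose detects vertical gradients.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)


def filter2d(image, kernel):
    """'Valid' sliding-window filter (cross-correlation, as CNN layers compute it)."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def edge_magnitude(image):
    """Gradient magnitude: strong where brightness changes sharply."""
    gx = filter2d(image, SOBEL_X)
    gy = filter2d(image, SOBEL_X.T)
    return np.hypot(gx, gy)


scene = np.zeros((5, 6))
scene[:, 3:] = 1.0          # bright roof from column 3 onward
print(edge_magnitude(scene))
```

The response is zero over uniform ground and uniform roof, and peaks only along the boundary between them.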

SO WE’RE STREAMING DATA AND WE’RE LABELING OBJECTS. THEN WHAT HAPPENS?

If you’re doing the labeling in QGIS or ArcGIS, there are two ways to get this data into the platform.

One is through plugins, which are still in development and in beta. The other is to upload GeoJSON vector data directly as training data.

You don’t have to redraw everything once on the platform. If you already have building outlines for a city, you can reuse your existing data to train a detector.

I have a Web Map Service (WMS) for Paris, very detailed data—aerial RGB imagery. I downloaded all the building polygons for Paris from OpenStreetMap.

Can I use it to create training data for a detector that runs on this Web Map Service?

Technically, yes.

You need to convert the OpenStreetMap data into GeoJSON, or write a script to upload it to Picterra; there’s an API for scripting against Picterra. Then you connect the WMS to Picterra.
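The conversion step can be sketched in a few lines of Python. This assumes the OpenStreetMap building outlines have already been extracted as lists of (longitude, latitude) vertices; parsing the OSM file itself and uploading through Picterra's API are not shown, and the `osm_id` property and sample coordinates are made up. The output is a GeoJSON FeatureCollection with closed polygon rings, as the GeoJSON format requires.

```python
import json


def building_feature(ring, osm_id=None):
    """Wrap one exterior ring of (lon, lat) pairs as a GeoJSON Polygon feature."""
    coords = [list(pt) for pt in ring]
    if coords[0] != coords[-1]:
        coords.append(coords[0])      # GeoJSON linear rings must be closed
    if len(coords) < 4:
        raise ValueError("a polygon ring needs at least 3 distinct vertices")
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [coords]},
        "properties": {"osm_id": osm_id},   # hypothetical attribute
    }


def to_feature_collection(buildings):
    """buildings: iterable of (osm_id, ring) pairs extracted from OSM data."""
    return {
        "type": "FeatureCollection",
        "features": [building_feature(ring, osm_id) for osm_id, ring in buildings],
    }


# Two made-up footprints near Paris, longitude first as GeoJSON requires.
buildings = [
    (1, [(2.3500, 48.8560), (2.3504, 48.8560), (2.3504, 48.8563), (2.3500, 48.8563)]),
    (2, [(2.3510, 48.8570), (2.3513, 48.8570), (2.3513, 48.8572), (2.3510, 48.8572),
         (2.3510, 48.8570)]),
]
geojson_text = json.dumps(to_feature_collection(buildings))
```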

Now you’re able to train the detector from the WMS from Paris and OpenStreetMap data that you ingested in Picterra.

OpenStreetMap may have legal constraints on what you can do with their data, so be sure to check before doing this.

SOUNDS LIKE A LOT OF PREP WORK. HOW CAN WE SPEED UP THE PROCESS?

In many countries, people have access to open WMS aerial imagery and cadastral GIS data, and you can ingest both into Picterra. It’s a great way to get started if you want to train a detector on buildings or other objects recorded in cadastral data.

WHAT’S THE TRAINING PROCESS LIKE?

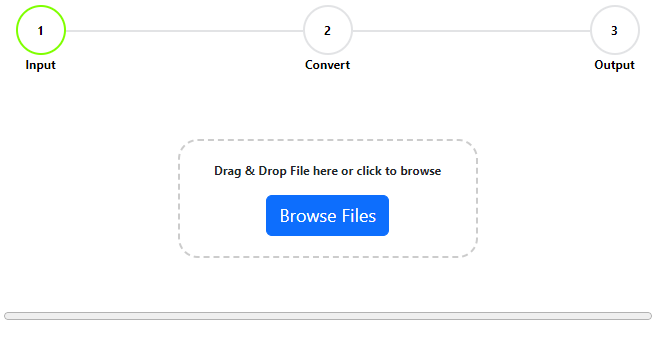

The platform has an easy-to-use UI that lets you become your own machine learning engineer.

Define the training data by adding imagery and the areas you need to annotate and label, including accuracy areas. At the end of each training run, you get a score for how good the detector is, so you evaluate its accuracy as you train it.

Upload the first set of training data—or draw it. Train the detector and check what the accuracy is in the accuracy areas. From there, you annotate and iterate a few more rounds until you get good results.
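Which metric Picterra's accuracy score uses isn't stated here. A common way to score object detectors, sketched below as an assumption, is to match predictions to the annotations in the accuracy areas by intersection over union (IoU) and report precision, recall, and their harmonic mean, F1. Boxes stand in for polygons to keep the geometry simple.

```python
def iou(a, b):
    """Intersection over union of two boxes (min_x, min_y, max_x, max_y)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def f1_score(predicted, annotated, threshold=0.5):
    """Greedily match each prediction to its best unmatched annotation by IoU.

    A match counts as a true positive when IoU >= threshold.
    Returns (precision, recall, f1).
    """
    unmatched = list(annotated)
    tp = 0
    for p in predicted:
        best = max(unmatched, key=lambda a: iou(p, a), default=None)
        if best is not None and iou(p, best) >= threshold:
            unmatched.remove(best)
            tp += 1
    fp = len(predicted) - tp
    fn = len(annotated) - tp
    precision = tp / (tp + fp) if predicted else 0.0
    recall = tp / (tp + fn) if annotated else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


annotated = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
predicted = [(1, 1, 11, 11), (20, 20, 30, 30), (60, 60, 70, 70)]
print(f1_score(predicted, annotated))
```

Here two of three predictions match an annotation and one annotation is missed, so precision, recall, and F1 all come out at two thirds; adding annotations where the detector fails is what moves these numbers between rounds.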

This is the basic workflow of training a detector.

CAN I ADD GEOGRAPHICAL CONSTRAINTS? I’M ONLY INTERESTED IN CERTAIN AREAS, NOT OTHERS.

It’s possible at the time of detection.

Setting detection areas is a way to constrain the detector to only look into a polygon you specify.

Suppose you want to detect solar panels on buildings. You would train a solar panel detector, but you would use the building footprint as the detection area. The detector will only look for solar panels that are within a building footprint.

This makes your life easier in two ways. You don’t have to make the model work for solar panels outside buildings, such as on roads. Plus, you reduce the amount of data the detector has to go through, so it doesn’t waste time on mountains or farms.
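The geometric question behind a detection area is "is this inside the polygon?". The sketch below uses even-odd ray casting to keep only detections whose centre falls inside a building footprint. It filters results after the fact, whereas Picterra restricts where the detector looks in the first place, which is what saves processing time; the detection dictionaries are a hypothetical format.

```python
def point_in_polygon(x, y, ring):
    """Even-odd ray casting: is (x, y) inside the polygon given by `ring`?"""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does a horizontal ray going right from (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def keep_in_areas(detections, areas):
    """Keep detections whose 'center' (x, y) lies inside any detection area."""
    return [d for d in detections
            if any(point_in_polygon(*d["center"], area) for area in areas)]


footprints = [[(0, 0), (10, 0), (10, 8), (0, 8)]]     # one building footprint
detections = [{"id": "panel-1", "center": (4, 3)},    # on the roof
              {"id": "panel-2", "center": (15, 3)}]   # e.g. on a road
print([d["id"] for d in keep_in_areas(detections, footprints)])
```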

IS POLLING BUILT INTO THE DETECTION, OR DO I NEED TO THINK ABOUT IT?

It’s built in.

A lot of engineering effort went into getting the WMS and the processing right.

The complexity of detecting on a WMS is completely hidden from the user. Multiple machines pull data from the WMS in parallel to make everything faster. If you need to retrain the detector on the WMS, you don’t have to re-fetch everything; we use a local cache to make it faster.
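Parallel fetching plus caching can be sketched on a single machine with a thread pool and an in-memory cache. This is an illustration under stated assumptions, not Picterra's system: `fetch` stands in for a real WMS request (for example, one `GetMap` call per tile), the tile keys are hypothetical `(zoom, x, y)` tuples, and a production cache would live on disk or in storage shared across machines.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class TileCache:
    """Fetch tiles in parallel and keep them, so a retrain doesn't re-download them."""

    def __init__(self, fetch, workers=8):
        self._fetch = fetch      # hypothetical callable: tile key -> image bytes
        self._store = {}         # in-memory cache: tile key -> image bytes
        self._lock = threading.Lock()
        self._workers = workers

    def _get(self, key):
        with self._lock:
            if key in self._store:
                return self._store[key]
        data = self._fetch(key)  # slow network I/O runs outside the lock
        with self._lock:
            # If another thread fetched the same key meanwhile, keep the first copy.
            return self._store.setdefault(key, data)

    def get_many(self, keys):
        """Return tiles in the order of `keys`, fetching missing ones concurrently."""
        with ThreadPoolExecutor(max_workers=self._workers) as pool:
            return list(pool.map(self._get, keys))


# Usage with a fake fetcher that records every "network" request it makes.
calls = []


def fake_fetch(key):
    calls.append(key)
    return f"tile{key}".encode()


cache = TileCache(fake_fetch, workers=4)
keys = [(10, x, y) for x in range(3) for y in range(3)]
first = cache.get_many(keys)   # nine fetches spread over four threads
second = cache.get_many(keys)  # served entirely from the cache
print(len(calls))
```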

All you see is a Run button on the UI. The rest is working in the background and you only know when it’s done.

THIS CHANGES THE WAY WE THINK ABOUT AERIAL IMAGERY PRESENTED AS A WEB MAP SERVICE

It’s new for many. A WMS serves images and is usually used for visualization, not for processing. It’s even new for satellite image providers, whose licensing models are based on access to GeoTIFFs.

TELL ME ABOUT THE SLURRY TANKS IN DENMARK

At the end of 2019, a Danish company called SEGES had a problem and a tight deadline to solve it.

They are consultants for farmers and the government, and they needed to estimate ammonia emissions from the roughly 35,000 slurry tanks in Denmark within a month.

There was no map of slurry tanks. They aren’t normally mapped, and they don’t appear in the cadastre.

We started with WMS aerial imagery for Denmark, hoping we could detect the tanks, estimate how much slurry they contain, and calculate the ammonia emissions.

We connected the WMS to the platform and trained the detector. Multiple detectors, to be precise, because slurry tanks can be open or roofed. We started with 20 annotations, and that worked fine. After a few more rounds of iteration and 57 annotations, we got excellent results.

Why so few annotations? Because the shape of a slurry tank is simple and there aren’t many similar objects near farms. They’re unique objects in the given detection area.

Using detection areas worked. SEGES circled each farm to make a detection area, saving time by not detecting in cities or countryside without farms. The detection then ran over 1 terabyte of imagery data and took a day.

CAN I TAKE THE DANISH MODEL OVER TO CANADA?

Probably. It depends on the quality of the imagery, as well.

Denmark has excellent imagery: uniform and clean. If I were to move the model to Canada, I would first connect the Canadian WMS and run detection on a few areas to get a feel for how it does.

Is it working well, or is it a complete failure?

Depending on what you get, you may need to generalize the detector so it works on different imagery. You add more training data specific to the new region. By all means, keep the Danish training data, because it’s useful. But by adding a few examples of slurry tanks in Canada, the detector learns about their diversity and copes with any difference in image quality.

CAN YOU COPY PASTE A DETECTOR?

Yes, and then modify it to serve a new project.

Think of Picterra as your experimental workbench. It’s where you’d keep your detectors and run experiments on them. Copy, modify, or add training data to them from different sources. Train the detectors on different data and data sources to evaluate what’s best for what you need to do.

Machine learning engineers spend their days doing this. Here, you can experiment and find the best model without coding experience or years of studying machine learning engineering.

WHAT DO WE GET OUT OF THE MODEL?

Once you run the detector, you get detailed polygons. There’s also a detector setting to extract bounding boxes instead of polygons if you need to.

You can then export the detections as a shapefile, GeoJSON, KML, or CSV. The polygons have a few attributes, including the area and the perimeter of each polygon. You can open the exported files in QGIS or ArcGIS and carry on with your GIS processing.
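Area and perimeter attributes like these are straightforward to compute once polygons are in a projected coordinate system measured in metres (for example, UTM); computed directly on longitude and latitude, the numbers would not be in metres at all. The sketch below uses the shoelace formula for area, sums edge lengths for the perimeter, and also derives the bounding box that the detector setting can output instead of polygons. It handles simple polygons without holes and is not necessarily how Picterra computes its attributes.

```python
import math


def _edges(ring):
    """Consecutive vertex pairs, wrapping from the last vertex back to the first."""
    return zip(ring, ring[1:] + ring[:1])


def ring_area(ring):
    """Shoelace formula: planar area of a simple polygon, in squared CRS units."""
    s = sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in _edges(ring))
    return abs(s) / 2.0


def ring_perimeter(ring):
    """Sum of edge lengths, in CRS units."""
    return sum(math.dist(a, b) for a, b in _edges(ring))


def bounding_box(ring):
    """Axis-aligned (min_x, min_y, max_x, max_y) of the polygon."""
    xs, ys = zip(*ring)
    return min(xs), min(ys), max(xs), max(ys)


# A 20 m x 10 m rectangle in projected metres.
rect = [(0, 0), (20, 0), (20, 10), (0, 10)]
print(ring_area(rect), ring_perimeter(rect), bounding_box(rect))
```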

There’s also a feature to generate reports as a shared web map: a link to a map visualization of the output data. You can share this link with clients or colleagues so they can view the detections and get the statistics.

ARE THERE PLUGINS FOR THIS?

The plugins are in the early stages.

With a few clicks, you can upload data from QGIS or ArcGIS directly to Picterra through the plugin. This speeds up your workflow. Then you run detection and get the results as a new layer in your GIS software.

WHAT ABOUT THE DETECTION PROCESS?

You can’t add the WMS from the GIS plugins directly just yet. That’s something that we have on our roadmap for the plugins.

You need to do the setup on our web UI. Once it’s set up on Picterra, all the processing happens in the cloud. When you start the detector from the plugins, detection, processing, and data fetching happen on our servers in the cloud. You can close your GIS software, shut down your computer for the night, and come back in the morning to your results.

WHAT WOULD YOU NOT USE THIS FOR?

It’s tough to say without trying.

The difficulty comes when instances of the same object can look very different. In that case, it’s probably going to be tricky for the detector to detect them. Buildings, for example, are both easy and hard, depending on what you want to do.

You can do very well with machine learning for buildings in a city. But let’s say you train a building detector on Switzerland’s cities. Then you apply it in Africa or in Southeast Asia. The buildings are entirely different. The detector will fail to detect buildings.

Also, the detector doesn’t understand the world as we do. It doesn’t embed the constraints we know about the world, such as that a building is usually located close to a road. We need to teach the detector that kind of human reasoning.

WHAT ABOUT THINGS WITH CHANGING SHAPES? LIKE TREES?

On Picterra, we work with two broad types of detectors.

Count detectors are for counting individual objects. Segmentation detectors are for mapping areas.

Things like vegetation and trees come under the segmentation bucket. Unless you need to count the individual trees, you’ll use the segmentation detectors to map an area covered by a forest.
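The difference between the two detector types shows up in what you measure from the output. Assuming a detector has already produced a binary mask (1 = tree canopy), the sketch below counts separate blobs, the kind of number a count detector gives you, and sums the covered area, the kind of number a segmentation detector gives you. The mask and the 0.5 m pixel size are made up. It also hints at why counting is harder for trees: crowns that touch merge into a single blob.

```python
from collections import deque


def count_objects(mask):
    """Number of 4-connected blobs of 1s in a binary mask (breadth-first flood fill)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


def covered_area(mask, pixel_size_m):
    """Total area covered by 1s, in m², assuming square pixels."""
    return sum(map(sum, mask)) * pixel_size_m ** 2


canopy = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
]
print(count_objects(canopy), covered_area(canopy, 0.5))
```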

Picterra is a game-changer. WMS has restricted what users can do with the imagery, but now you can access the data itself. Imagine the use cases and businesses we can build around this.

After the recording, Julien let me in on a secret: Picterra uses code from MapProxy to pull these Web Map Services. MapProxy is free, open-source software written in Python. It’s a great tool with a ton of functionality, and it’s well worth checking out.