Peter Atalla is the CEO of VoxelMaps, and his latest project is nothing short of a miracle. He’s using voxels to build a 4D volumetric digital twin of the Planet.

How did that even come about? He started Navmii, one of the first navigation companies for the automotive industry, 17 years ago. Its mobile app had 30 million users.

In the last decade, Navmii shifted from navigation to building maps, covering 180 countries in the process.

From there, building maps for machines, and a model of the entire Planet, followed naturally. But he's not building just any model.

He’s building a 4D volumetric one.

WHAT IS A VOXEL?

It’s a 3D volumetric pixel.

A cube.

But voxels are nothing new. They’ve been used extensively in two key areas within computing.

Some computer games render their worlds with voxels instead of polygons. Minecraft is a good example: its world is built from voxels. Gaming companies value voxels over polygons for their multi-resolution capability.

In robotics, voxels are used to reduce the size of LIDAR point clouds and to create small, dynamic maps for robots, which we call VOGs (Voxel Occupancy Grids).

A VOG is a 3D grid of voxels. It's typically used by small robots that scan a room and produce a basic 3D map of it to navigate by.
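Shrinking a LIDAR point cloud with voxels, the first robotics use described above, is commonly done with voxel-grid downsampling: every point that falls into the same voxel is replaced by a single representative point. The sketch below uses the centroid of each voxel's points. The function name and the 10 cm voxel size are illustrative assumptions, not anything VoxelMaps has described.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Index = Tuple[int, int, int]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace every group of points sharing a voxel with their centroid."""
    buckets: Dict[Index, List[Point]] = defaultdict(list)
    for p in points:
        # math.floor (not int()) so negative coordinates land in the right cell.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    centroids = []
    for key in sorted(buckets):
        group = buckets[key]
        n = len(group)
        centroids.append(tuple(sum(p[axis] for p in group) / n for axis in range(3)))
    return centroids


# Four LIDAR returns with 10 cm voxels: two clusters collapse to two points.
cloud = [(0.01, 0.02, 0.0), (0.03, 0.04, 0.0), (0.51, 0.0, 0.0), (0.52, 0.0, 0.0)]
print(voxel_downsample(cloud, voxel_size=0.1))
```

The output has one point per occupied voxel, which is what keeps the cloud small no matter how densely the sensor sampled a surface.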

VoxelMaps took that concept and applied it at a planetary level using an MRVOG (Multi-Resolution Voxel Occupancy Grid). We placed a giant mega voxel, a single cube, over the Planet. The cube is filled with multi-resolution voxels, which can be any size; for our purposes, we use voxels from eight meters down to one centimeter.
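The interview does not say how VoxelMaps subdivides its mega voxel. A common way to get multiple resolutions is an octree, where each level halves the edge length and splits a voxel into eight children. Assuming that scheme, this sketch counts how many levels it takes to get from 8 m to 1 cm and how many voxels each level could hold.

```python
import math

ROOT_EDGE_M = 8.0     # coarsest voxel size mentioned in the interview
TARGET_EDGE_M = 0.01  # finest voxel size mentioned in the interview


def levels_needed(root_edge: float, target_edge: float) -> int:
    """Number of halvings before the edge length drops to target_edge or below."""
    return math.ceil(math.log2(root_edge / target_edge))


def edge_at_level(root_edge: float, level: int) -> float:
    return root_edge / 2 ** level


depth = levels_needed(ROOT_EDGE_M, TARGET_EDGE_M)
for level in range(depth + 1):
    # Each level splits every voxel into 8 children, so counts grow as 8**level.
    print(f"level {level:2d}: edge {edge_at_level(ROOT_EDGE_M, level) * 100:8.3f} cm, "
          f"up to {8 ** level:,} voxels per 8 m cell")
```

With exact halving, 8 m does not divide evenly down to 1 cm (8 m / 1 cm = 800, not a power of two), so in this sketch the finest level is 10, with an edge of 8/1024 m, about 0.78 cm. A fully subdivided 8 m cell at that level would hold 8^10, about 1.07 billion voxels, which is why only occupied regions are worth subdividing.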

This virtual framework, or construct, extends through the whole of the Earth: indoors, outdoors, underground, and through the oceans. It's an Earth-centered, Earth-fixed model.

On a 2D map, you'd have grid lines, but the grid itself carries no information. It's the same here: each voxel has a permanent position and a unique address in space, but it starts out empty.
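A "unique address" can be made concrete in many ways, and the interview does not specify VoxelMaps' encoding. One common option, assumed here, is an octree path: one digit from 0 to 7 per level, recording which child octant contains the point. A useful property is that a coarse voxel's address is a prefix of the address of every finer voxel inside it. `voxel_address` and the 8 m root cube are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def voxel_address(point: Vec3, origin: Vec3, root_size: float, level: int) -> str:
    """Octant path from the root cube down to `level`: one digit (0-7) per level."""
    cells_per_axis = 2 ** level
    ix, iy, iz = (
        math.floor((p - o) / root_size * cells_per_axis) for p, o in zip(point, origin)
    )
    for i in (ix, iy, iz):
        if not 0 <= i < cells_per_axis:
            raise ValueError("point lies outside the root cube")
    digits = []
    for bit in range(level - 1, -1, -1):  # coarsest level first
        octant = ((ix >> bit) & 1) | (((iy >> bit) & 1) << 1) | (((iz >> bit) & 1) << 2)
        digits.append(str(octant))
    return "".join(digits)


# One point in an 8 m root cube, addressed at 1 m (level 3) and at 2 m (level 2).
fine = voxel_address((5.0, 1.0, 7.0), (0.0, 0.0, 0.0), 8.0, 3)
coarse = voxel_address((5.0, 1.0, 7.0), (0.0, 0.0, 0.0), 8.0, 2)
print(fine, coarse, fine.startswith(coarse))  # 547 54 True
```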

The occupancy status of the voxel needs to be validated:

Is it free? Is there something in it?

We use sensors to do this, LIDAR being the main one. The LIDAR fires a laser beam that passes through the environment. If the beam passes through a voxel without reflecting off anything, that space is labeled “free.” At surface level, most of the Planet is free space.

When the laser beam reflects off something, we collapse down to the smallest voxel size at that point, and that voxel is marked “occupied.”
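The free/occupied labeling above amounts to tracing each beam through the grid. The sketch below does this with the standard voxel-traversal algorithm of Amanatides and Woo on a single-resolution grid, marking crossed voxels free and the return voxel occupied. It is a deliberate simplification: it ignores the collapse to finer voxels, and it uses a binary rule where occupancy-mapping systems usually accumulate probabilities (log-odds) over many beams. The function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Index = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]
FREE, OCCUPIED = "free", "occupied"


def to_index(p: Vec3, size: float) -> Index:
    return tuple(math.floor(c / size) for c in p)


def trace_ray(origin: Vec3, hit: Vec3, size: float) -> List[Index]:
    """Voxels crossed by the segment origin -> hit (3D DDA after Amanatides & Woo)."""
    cur = list(to_index(origin, size))
    end = to_index(hit, size)
    step, t_max, t_delta = [0, 0, 0], [math.inf] * 3, [math.inf] * 3
    for a in range(3):
        d = hit[a] - origin[a]
        if d > 0:
            step[a] = 1
            t_max[a] = ((cur[a] + 1) * size - origin[a]) / d
            t_delta[a] = size / d
        elif d < 0:
            step[a] = -1
            t_max[a] = (cur[a] * size - origin[a]) / d
            t_delta[a] = -size / d
    cells = [tuple(cur)]
    while tuple(cur) != end:
        a = t_max.index(min(t_max))  # axis whose next voxel boundary is closest
        if t_max[a] > 1.0 + 1e-9:    # guard against floating-point overshoot
            break
        cur[a] += step[a]
        t_max[a] += t_delta[a]
        cells.append(tuple(cur))
    return cells


def integrate_ray(grid: Dict[Index, str], origin: Vec3, hit: Vec3, size: float) -> None:
    """Mark voxels the beam crossed as free and the voxel it hit as occupied."""
    hit_cell = to_index(hit, size)
    for cell in trace_ray(origin, hit, size):
        if cell != hit_cell:
            grid.setdefault(cell, FREE)  # binary rule: never downgrade an occupied voxel
    grid[hit_cell] = OCCUPIED


grid: Dict[Index, str] = {}
# Sensor in voxel (0, 0, 0); the beam returns from 35 cm away along x (10 cm voxels).
integrate_ray(grid, (0.05, 0.05, 0.05), (0.35, 0.05, 0.05), 0.1)
print(grid)
```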

We etch out the Planet’s matter and create a dense 3D volumetric model of everything that’s there. When the occupancy is done, it gives us the shape of everything.

We can then layer additional surface information onto the voxels, such as camera RGB values, hyperspectral imagery, or radar. Once that data is attached, AI models look at the objects (collections of voxels in 3D, together with their surface data) to understand what we see.
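As a minimal sketch of layering surface data, assuming camera pixels have already been projected to 3D points upstream, the code below keeps a running average color per occupied voxel. `SurfaceAttributes` and `attach_colors` are hypothetical names; hyperspectral or radar values would be further fields of the same kind.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set, Tuple

Index = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


@dataclass
class SurfaceAttributes:
    """Running RGB average for one occupied voxel (hypothetical schema)."""
    samples: int = 0
    r: float = 0.0
    g: float = 0.0
    b: float = 0.0

    def add(self, rgb: Vec3) -> None:
        self.samples += 1
        self.r += rgb[0]
        self.g += rgb[1]
        self.b += rgb[2]

    def mean_rgb(self) -> Optional[Vec3]:
        if self.samples == 0:
            return None
        n = self.samples
        return (self.r / n, self.g / n, self.b / n)


def attach_colors(occupied: Set[Index], samples: Iterable[Tuple[Vec3, Vec3]],
                  size: float) -> Dict[Index, SurfaceAttributes]:
    """Attach camera colors only to voxels the LIDAR already marked occupied."""
    layer: Dict[Index, SurfaceAttributes] = {}
    for point, rgb in samples:
        key = tuple(math.floor(c / size) for c in point)
        if key in occupied:
            layer.setdefault(key, SurfaceAttributes()).add(rgb)
    return layer


occupied = {(0, 0, 0)}
samples = [((0.01, 0.01, 0.01), (200, 100, 0)),
           ((0.02, 0.02, 0.02), (100, 100, 100)),
           ((0.5, 0.5, 0.5), (1, 1, 1))]  # lands in free space, so it is ignored
layer = attach_colors(occupied, samples, 0.1)
print(layer[(0, 0, 0)].mean_rgb())  # (150.0, 100.0, 50.0)
```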

We can automatically recognize features and extract meaning, attributes, and measurements to a very high degree of accuracy.

IS THIS 3D OR 4D? DO YOU CONSIDER TIME?

Time is a key element.

Each voxel has an unlimited number of time states. We make multiple data-collection passes. When AI recognizes these 3D structures, it also looks at the time series and detects what has changed since earlier passes.
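One way to picture a voxel's time states, assuming each collection pass writes a dated state per address, is a sorted time series per voxel that can be queried for the latest state on or before a date. The class below is a hypothetical sketch of that idea plus a naive change query. The interview does not describe VoxelMaps' storage, and the change detection it mentions is done by AI, not by this simple comparison.

```python
from bisect import insort
from collections import defaultdict
from datetime import date
from typing import Dict, List, Optional, Tuple


class VoxelHistory:
    """Occupancy states per voxel address, one entry per collection pass."""

    def __init__(self) -> None:
        self._states: Dict[str, List[Tuple[date, str]]] = defaultdict(list)

    def record(self, address: str, when: date, state: str) -> None:
        insort(self._states[address], (when, state))  # keep each series sorted by date

    def state_at(self, address: str, when: date) -> Optional[str]:
        """Latest state observed on or before `when` (None if never observed)."""
        latest = None
        for t, state in self._states.get(address, []):
            if t > when:
                break
            latest = state
        return latest

    def changed_between(self, t0: date, t1: date) -> List[str]:
        """Addresses whose state at t1 differs from their state at t0."""
        return sorted(a for a in self._states if self.state_at(a, t0) != self.state_at(a, t1))


history = VoxelHistory()
history.record("547", date(2021, 3, 1), "free")
history.record("547", date(2022, 3, 1), "occupied")  # e.g. a newly installed sign
history.record("546", date(2021, 3, 1), "occupied")
print(history.changed_between(date(2021, 6, 1), date(2022, 6, 1)))  # ['547']
```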

The extra dimension of time is a unique feature of the model.

DO VOXELS UNDERSTAND TOPOLOGY? OR IS TOPOLOGY BUILT INTO THE MODEL?

It’s built into the model.

As we measure and etch out matter, we end up with a wealth of information for the shapes, measurements, and other data. AI looks at the measurements and the additional information and extracts the meaning that’s required.

WHAT DOES AN EARTH-CENTERED AND EARTH-FIXED COORDINATE SYSTEM MEAN?

The model will work indoors, outdoors, underground, below sea level, and as we travel through the atmosphere.

We’re building a complete model of everything, indoors and outdoors, that we’ll be able to see, measure, and extract insight and meaning from.
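Earth-centered, Earth-fixed (ECEF) is a Cartesian frame with its origin at the Earth's center of mass, the Z axis toward the North Pole, and the X axis through the point where the equator meets the prime meridian. The axes rotate with the Earth, so a fixed point on the ground keeps fixed coordinates. The sketch converts latitude, longitude, and height to ECEF, assuming the WGS 84 ellipsoid (the interview does not name a datum). Negative heights work exactly like positive ones, which is what lets a single frame cover underground and below-sea-level space as well as the atmosphere.

```python
import math
from typing import Tuple

# WGS 84 ellipsoid constants.
WGS84_A = 6378137.0                 # semi-major axis (m)
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, h_m: float) -> Tuple[float, float, float]:
    """Latitude/longitude/ellipsoidal height -> Earth-centered, Earth-fixed X, Y, Z (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)  # prime-vertical radius
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - WGS84_E2) + h_m) * math.sin(lat)
    return x, y, z


# A point 30 m below the ellipsoid gets coordinates just like one above it.
print(geodetic_to_ecef(51.5, -0.12, -30.0))
```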

A complete model of everything sounds like a God Mode map, the kind of cheat code gamers love…

HOW CAN WE GET THE DATA OUT AND USE IT TO MATCH OTHER DATA?

The model itself almost becomes a data abstraction layer. We collect and store the data — absolutely everything — in this voxel occupancy grid. We don’t try to get rid of any data to produce this model.

Depending on the use case, people might choose to use the data for 3D or 4D analysis directly on the platform in voxels. For some, this could remove the need for various tools.

If needed, though, it's easy to export the data in various formats for use in other toolsets: shapefiles, NDS for a high-definition autonomous vehicle map, or simply points for another system or platform.
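Shapefile and NDS export depend on dedicated libraries and specifications that the interview does not go into, so this sketch covers only the simplest option mentioned: exporting voxels as points. It writes each occupied voxel's center, in the grid's local metric frame, to CSV using Python's standard `csv` module. The CSV layout and `export_points` are assumptions.

```python
import csv
import io
from typing import Dict, TextIO, Tuple

Index = Tuple[int, int, int]


def export_points(voxels: Dict[Index, str], size: float, out: TextIO,
                  include_free: bool = False) -> int:
    """Write one CSV row (voxel center + state) per voxel; return rows written."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["x", "y", "z", "state"])
    rows = 0
    for (i, j, k), state in sorted(voxels.items()):
        if state != "occupied" and not include_free:
            continue  # free space is usually not worth exporting as points
        writer.writerow([(i + 0.5) * size, (j + 0.5) * size, (k + 0.5) * size, state])
        rows += 1
    return rows


buffer = io.StringIO()
export_points({(0, 0, 0): "occupied", (1, 0, 0): "free", (2, 0, 0): "occupied"}, 0.5, buffer)
print(buffer.getvalue())
```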

It's a global, persistent spatial database. People can do a lot within the platform, probably a lot more than with existing toolsets.

EVERY MACHINE WILL NEED ACCESS TO CERTAIN PIECES OF THIS MODEL AT SOME STAGE…

Machines require a much more detailed map. IoT devices and various forms of AI need to extract information from the environment, as do autonomous systems such as autonomous vehicles, automated delivery robots, miniature robots within a building, and drones.

This 4D model, accurate to 1 cm, differs significantly from the traditional maps we're used to. That doesn't mean the data isn't useful to humans; it just means that much of it will probably be used first by machines, which then provide insights in a human context.

HOW DOES COLLECTING “ABSOLUTELY EVERYTHING” HAPPEN?

For cities, we use a mobile mapping system: a collection of sensors including high-accuracy LIDAR, high-megapixel 360-degree cameras, and corrected, high-accuracy GPS.

We drive these sensors through cities, and they collect everything they see. Everything the LIDAR touches or the cameras see is built into the model.

The sensors on top of the vehicles collect information and fuse it into a voxel stream. That stream is pushed to our cloud and merged, then checked and cleaned to ensure there are no duplicates, and a final voxel product is born.

For areas cars can’t get to, we use people to collect the information. They carry backpacks with LIDAR and cameras. They walk into buildings, shopping centers, and parking garages to cover them all.

On top of this, we use aerial LIDAR. We fly helicopters or other aircraft above the city and collect aerial LIDAR and aerial imagery.

All this is then fused together within the voxel pipeline to create a final output and model.
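The interview describes vehicle, backpack, and aerial streams being merged and de-duplicated, but not how. A minimal sketch, assuming each stream yields `(address, state, source)` records, is to merge on the voxel address so each address appears once, resolve disagreements by majority vote (ties go to "occupied"), and keep the set of sources as a provenance attribute. All names and the voting rule are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Record = Tuple[str, str, str]  # (voxel address, state, source id)


def merge_streams(*streams: Iterable[Record]) -> Dict[str, Tuple[str, List[str]]]:
    """Merge voxel streams so each address appears once, with its state and sources."""
    votes: Dict[str, Counter] = defaultdict(Counter)
    sources: Dict[str, Set[str]] = defaultdict(set)
    for stream in streams:
        for address, state, source in set(stream):  # drop exact duplicates within a stream
            votes[address][state] += 1
            sources[address].add(source)
    merged = {}
    for address, count in votes.items():
        # Majority vote; ties resolve to "occupied" to stay conservative.
        state = "occupied" if count["occupied"] >= count["free"] else "free"
        merged[address] = (state, sorted(sources[address]))
    return merged


car = [("547", "occupied", "car-1"), ("546", "free", "car-1"), ("546", "free", "car-1")]
backpack = [("547", "occupied", "backpack-2"), ("546", "occupied", "backpack-2")]
print(merge_streams(car, backpack))
```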

There are so many possibilities with this, including ocean mapping. Right now, the first use cases are around cities, but it will expand into indoor mapping and other areas, like science.

CAN THIS MODEL BE USED WITH EXISTING DATASETS?

In certain areas, yes, but there are considerations around it.

Just because a dataset exists, such as a LIDAR point cloud of an area, doesn't mean it's of a quality that can produce the model.

If we really want to get down to one centimeter or less in resolution, the quality of data needs to be high. The time when this data is collected is also significant to us.

Provided we have the input data, we can store it.

Voxels can carry attributes recording where their data came from, so various providers can supply data, not just us. If a dataset's resolution isn't sufficient to get down to one centimeter, the multi-resolution voxel model can store the coarser data within the same model and visualize it at the appropriate levels.

There’s flexibility for how the data is ingested.

We already know how to map cities. The real challenge is indoor data.

For indoor spaces, there’s only so much we can do as a company. It makes sense for us to focus on commercial spaces and public areas where the use cases are straightforward.

Still, there are many use cases for individuals, too. Obviously, we can't go into homes or offices to map them. That's why we let people upload their own data to the platform from a high-quality LIDAR sensor or a smartphone with LIDAR on it.

There'll be both a professional side of data collection and a crowdsourced side. The use cases may differ slightly between the two, but they can all exist within this model.

HOW DO YOU IDENTIFY OBJECTS?

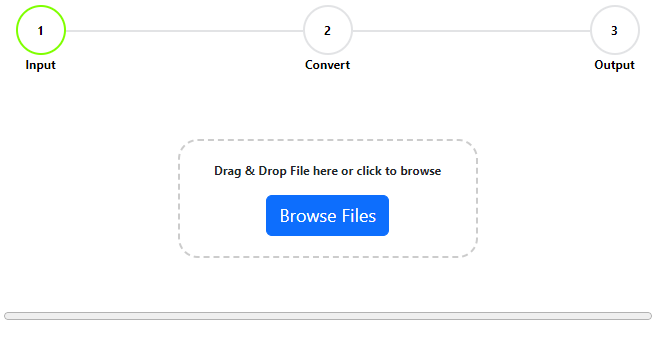

First, we collect and import the data; a pipeline then converts and fuses it to create a voxel digital twin.

Then, either people go through it to measure and recognize things, or AI does it.

Enter the VAMS platform.

It's a collection of neural networks that perform full 3D semantic segmentation. It doesn't just overlay some LIDAR points on 2D imagery; it looks at objects entirely in 3D. We train and annotate in 3D from the start, which results in high levels of object recognition.

At the moment, the platform recognizes around 200 different objects within cities, and we’re working on the same for indoor spaces — offices, malls, and public locations. We’re continually expanding the AI’s ability to recognize various features.

But again, it’s not just the recognition that we’re after — it’s to automatically pull out measurements and attributes on top of recognition.

We constantly add new features and extractions because as the data grows, we need to get the AI to do the heavy lifting and provide the insight.

Trying to use humans to do it is not scalable.

ARE YOU CONTINUOUSLY POPULATING THIS MODEL?

Yes.

And one of the great things is that you don't need to remap. For time-based data, that's really useful, because you've already captured everything in the environment. If you need to extract objects or features, or you want to take measurements in CAD, it's simply a case of training the AI agent to do that for you.

That's a quick process: typically around a week, or up to a month if it's something complicated. The AI can then run straight through the model, or through a particular area you've assigned to it, and pull back the information for you.

DO FEATURES GET TAGGED WITH SPECIFIC ATTRIBUTES? DO THEY KNOW THEY ARE TREES?

They do. All voxels that belong to an object carry an attribute identifying that object.
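Assuming a segmentation model such as VAMS writes a `(label, object id)` pair onto each voxel (the exact schema is not described), pulling out every tree along with a simple measurement becomes a grouping query. The height calculation assumes the third grid axis points up, which holds in a local frame but not in raw ECEF coordinates. `objects_with_label` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Index = Tuple[int, int, int]


def objects_with_label(labels: Dict[Index, Tuple[str, int]], wanted: str,
                       size: float) -> Dict[int, Dict[str, float]]:
    """Group voxels carrying `wanted` by object id and measure each object's height."""
    members: Dict[int, List[Index]] = defaultdict(list)
    for index, (label, object_id) in labels.items():
        if label == wanted:
            members[object_id].append(index)
    report = {}
    for object_id, cells in members.items():
        zs = [k for _, _, k in cells]  # assumes the third index axis points up
        report[object_id] = {"voxels": len(cells), "height_m": (max(zs) - min(zs) + 1) * size}
    return report


labels = {
    (0, 0, 0): ("tree", 1), (0, 0, 1): ("tree", 1), (0, 0, 2): ("tree", 1),
    (5, 5, 0): ("pole", 2),
    (9, 9, 0): ("tree", 3),
}
print(objects_with_label(labels, "tree", 0.5))
# {1: {'voxels': 3, 'height_m': 1.5}, 3: {'voxels': 1, 'height_m': 0.5}}
```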

DO YOU FILTER DATA AT THE COLLECTION?

We don’t filter at the time of collection — deliberately.

The collection is messy, yes: cars, pedestrians, cyclists, and leaves blowing in the air. We remove those using deep neural networks, not with more naïve approaches such as detecting movement and then ignoring that data.

This approach gives much better-quality data. It also allows us to reconstruct parts of a scene where data might have been missing.

The AI plays a critical role in recognizing the features and tidying the data to an optimal state.

WHAT’S A GREAT USE CASE FOR THIS?

High-definition maps for different autonomous vehicles — autonomous cars, delivery robots, or other robots.

Using the model, we extract the required features. For a lane model, that'd be semantic information such as traffic signs, road signs, etc. We also provide a localization framework and create localization maps from voxels. This allows the robot to understand where it is in the environment and to position itself.

This can range from a pure, camera-only VPS (Visual Positioning System) all the way to a LIDAR- or radar-based solution.
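Localizing against a voxel map can be illustrated with a deliberately simple scan-matching sketch: shift the robot's scan over a small range of voxel offsets and keep the shift that lands the most returns on occupied map voxels. This is an assumption for illustration only. It searches translations in x and y, whereas practical systems estimate full position and orientation (for example with ICP or NDT for LIDAR, or visual positioning for cameras), and it is not VoxelMaps' method.

```python
import math
from itertools import product
from typing import Iterable, Set, Tuple

Index = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


def localize(scan: Iterable[Vec3], occupied: Set[Index], size: float,
             search: int = 3) -> Tuple[Tuple[int, int], int]:
    """Brute-force the (dx, dy) voxel shift that best aligns a scan with the map."""
    cells = [tuple(math.floor(c / size) for c in p) for p in scan]
    best, best_score = (0, 0), -1
    for dx, dy in product(range(-search, search + 1), repeat=2):
        # Score = number of scan returns that land on an occupied map voxel.
        score = sum((i + dx, j + dy, k) in occupied for i, j, k in cells)
        if score > best_score:
            best, best_score = (dx, dy), score
    return best, best_score


# Map: a wall along y at x = 10 and a curb along x at y = 5 (1 m voxels).
wall_and_curb = {(10, 0, 0), (10, 1, 0), (10, 2, 0), (0, 5, 0), (1, 5, 0)}
# The same returns, expressed in a frame shifted by (-2, -1) voxels from the map.
scan = [(8.5, -0.5, 0.5), (8.5, 0.5, 0.5), (8.5, 1.5, 0.5), (-1.5, 4.5, 0.5), (-0.5, 4.5, 0.5)]
print(localize(scan, wall_and_curb, 1.0))  # ((2, 1), 5)
```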

This is the high-tech, future-facing end of the market, and it has already been implemented for the automotive industry.

For 5G, it's taking over from standard surveys.

Often, when infrastructure is put in for networks, such as small cells, there needs to be an audit for space availability on telegraph poles or streetlights within a city. Whoever owns that infrastructure leases it out.

Once availability is confirmed, the telcos look at where they can put their sensors. But it isn't just a case of finding a spare place for a sensor; it's also about placing the sensors optimally.

Somebody would do a survey of the location to understand what’s in front of it and work out whether this is a suitable location for the sensor. This can be expensive.

All of this can be fully automated. What would take hundreds of people to do can be done with a few vehicles collecting the model. The AI doesn’t just recognize the space to put the sensors but also understands what will happen with the 5G signal. We can model the 5G signal as a voxel cloud within the environment.

We can see how the environment affects the propagation of the signals outdoors and, more importantly, indoors as well.
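The interview does not describe the propagation model behind the 5G voxel cloud. As a heavily simplified sketch, assume free-space path loss, 20 log10(4 pi d f / c), to each target voxel's center, combined with a sampled line-of-sight test that treats any occupied voxel in between as a blocker. Real network planning also accounts for reflection, diffraction, and material losses; the functions and the 3.5 GHz example frequency are assumptions.

```python
import math
from typing import Dict, Iterable, Optional, Set, Tuple

Index = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def cell_of(p: Vec3, size: float) -> Index:
    return tuple(math.floor(c / size) for c in p)


def line_of_sight(a: Vec3, b: Vec3, occupied: Set[Index], size: float) -> bool:
    """Approximate test: sample the segment every quarter voxel and look for blockers."""
    ends = {cell_of(a, size), cell_of(b, size)}  # the antenna's and target's own voxels don't block
    steps = max(1, math.ceil(math.dist(a, b) / (size / 4)))
    for s in range(1, steps):
        t = s / steps
        cell = cell_of(tuple(ai + t * (bi - ai) for ai, bi in zip(a, b)), size)
        if cell in occupied and cell not in ends:
            return False
    return True


def coverage(tx: Vec3, freq_hz: float, targets: Iterable[Index],
             occupied: Set[Index], size: float) -> Dict[Index, Optional[float]]:
    """Path loss (dB) to each target voxel center, or None where the path is blocked.

    FSPL is a far-field formula; targets are assumed not to include the antenna's voxel.
    """
    result: Dict[Index, Optional[float]] = {}
    for cell in targets:
        center = tuple((i + 0.5) * size for i in cell)
        if line_of_sight(tx, center, occupied, size):
            result[cell] = fspl_db(math.dist(tx, center), freq_hz)
        else:
            result[cell] = None
    return result


wall = {(2, 0, 0)}  # one occupied voxel between the antenna and one of the targets
cov = coverage((0.5, 0.5, 0.5), 3.5e9, [(4, 0, 0), (0, 3, 0)], wall, 1.0)
print(cov)
```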

We’re taking the models inside.

This is the first time we’ve seen anything like this. 3D is already powerful. But having that 4D time-based aspect is helpful to understand how things change over time, depending on where you put the sensors or how that environment changes.

CAN I MAKE GLOBAL WEATHER MAPS?

How you model the input data is key.

We can model the physicality of the environment and the shapes of everything in it. For variable, non-physical phenomena, the model is only as accurate as the sensors collecting that information.

For 5G, we can do this relatively well in 3D and get valuable data to plot and see how the 3D environment affects the signal.

For weather data, we rely on the types of sensors used for collection and the density of the information. The higher the quality of the data, the better the model, and that quality is challenging to get from flat 2D time-series data.

Dynamic models are possible, assuming we have access to the data needed.

Taking the entire Planet and converting it to a voxel grid lets you discretize the environment. You can then model things that pass through it, build on it, or grow from it — all in a volumetric form closest to our reality. We don’t live in a world of 3D meshes — surfaces with no inherent data.

To perform calculations for various industries and areas of research, we need to use more volumetric data.

WHAT’S THE BIGGEST ROADBLOCK FOR 3D PROJECTS?

The magnitude of the data and the ability to process it.

When we first considered this model four years ago, we couldn’t imagine processing this amount of data at scale.

The vast majority of people view 3D as a file that needs to be loaded into an application to use it. In reality, 3D needs to be volumetric and global. You should be able to do calculations and analysis on the whole dataset. But that takes an incredible amount of storage and processing power, both of which are now within reach.

COULD THIS BE THE LAST DATA MODEL WE NEED FOR GEOSPATIAL?

There are always use cases where voxels aren’t necessarily the optimal way of doing things.

Voxels work as a data abstraction layer. They allow you to collect and store everything, and you can then export it into whatever format a given use case needs.

One issue with voxels is the size of data you need to store — although there are many clever ways to optimize it.

Some use cases, such as building maps for autonomous vehicles and drones, don't need a full copy of the voxel map. They need a submap created from it, which is far less demanding to process.
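Deriving a submap can be sketched as a crop followed by coarsening: keep the occupied voxels inside a bounding box, then merge blocks of fine voxels into coarser ones, marking a coarse voxel occupied if any voxel inside it is. The bounding box, the coarsening factor, and `extract_submap` are hypothetical; a production submap for vehicles would also carry semantic layers.

```python
from typing import Set, Tuple

Index = Tuple[int, int, int]


def extract_submap(occupied: Set[Index], lo: Index, hi: Index, factor: int) -> Set[Index]:
    """Crop occupied voxels to the box lo <= index < hi, then coarsen by `factor`.

    A coarse voxel is occupied if any fine voxel inside it is: a conservative
    choice for obstacle avoidance.
    """
    submap = set()
    for cell in occupied:
        if all(low <= c < high for c, low, high in zip(cell, lo, hi)):
            submap.add(tuple(c // factor for c in cell))  # floor division also handles negatives
    return submap


city = {(0, 0, 0), (1, 1, 1), (3, 0, 0), (10, 10, 10)}
print(extract_submap(city, (0, 0, 0), (4, 4, 4), 2))
```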

There is an immense value to using the voxel approach. Volumetric is going to become big, particularly as the gaming industry is getting behind it. The nice thing with the multi-resolution approach is you don’t have a limit on the resolution. There’s no reason you can’t do one-millimeter voxels.

Theoretically, it’s possible. There are constraints for processing time and the quality of the sensors creating the data.

A lot of future-proofing is built into a model like this.

IS THIS CREATIVE DESTRUCTION? WHAT TECHNOLOGY IS THIS MODEL REPLACING?

A lot of analysis in geospatial is 2D — for simplicity and ease.

But looking at things in 3D would be much more valuable. Standard concepts like layers of relatively basic data overlaid onto a 2D or 2.5D map will be replaced and there’ll be easier ways of doing things.

The data will become a spatial database you can search for information. The old way of plotting areas and extracting layers manually will probably be replaced by some form of intelligent search that is geospatial and volumetric and pulls the data back for you.

Up to 90% of the traditional geospatial tools most people use could be replaced or improved in a 3D model.

THIS IS PERFECT FOR AUGMENTED REALITY IDEAS…

We already have models working for VR and for AR.

Virtual reality is more about traditional exploration of the survey data, such as virtual surveys.

The augmented reality applications are interesting, both for positioning content and for new consumer models.

You might want to create a model of your indoor spaces that is secure and private to you, accessible only with your permission. This would allow various businesses to sell or supply services to you.

Virtual surveys or virtual carpet installations come to mind. Suppliers could have a look at the inside of your house and measure up without even having to be there. There is also augmented reality for objects you might want to buy and have in your home, like couches or curtains.

This is already happening, and we have a few demos. Interestingly, the AR piece is not dissimilar to the robotics localization we talked about: similar visual positioning technologies are used to localize content to this high accuracy.

WHAT MAKES YOU THINK YOU’RE THE RIGHT PERSON TO BRING THIS IDEA TO THE WORLD?

My extensive background in building maps of the world has stood me in good stead for thinking about this.

We build maps at a large scale for ourselves and for our clients. We collect millions of kilometers of data every year around the Planet. We know what we’re doing. We’ve been doing this for a long time, building it up.

That said, mapping the entire Planet is a huge ask. Even companies like Google struggle to collect all of that data by themselves.

We need to expand the collection.

What's important to us is that data is collected not only by our sensors and teams but also by the crowd, other companies, and organizations, who can contribute it to the project. We'll be opening up an online platform later this year, which will allow people to do that.

That’s quite exciting for us.

WHAT DO YOU THINK NEEDS TO BE IN PLACE FIRST BEFORE IMPLEMENTING A BIG IDEA?

It depends on what they’re trying to achieve.

There are outstanding technical solutions to a lot of problems out there. Look further down the line to see who will use the data and what their needs, wants, and problems are.

That can include future-gazing.

In our case, you can see potential problems in the future that will need this 4D model. If you think about it, everything has been virtualized, yet we still don't have a complete model of the entire world, so it's inevitable that one will be built.

We’re here to be one of the key players in enabling this.

If you’ve got a big idea, and understand where you’re taking it, then go for it.

If it’s just at the idea stage where it might be hard to get people to buy in, your next step should be planning how to get people on board.

There is a lot to take in. I had to listen to the episode three times. I’m still walking around the house wondering if I’ve completely understood the concept, the implications, and the use cases of the model.

—————————

The terms VOG (Voxel Occupancy Grids) and MRVOG (Multi-resolution VOG) were new to me.

Could Mr. Vog be a distant relation to Mr. Sid?

Don’t quote me on that.